Alpha channel

An alpha channel is the part of the image data that describes each pixel’s level of opacity or translucency, as opposed to the other channels, which describe light and color. Many people think of an alpha channel as a transparency mask. However, that can suggest it is binary (either opaque or transparent), whereas an alpha channel most often has at least 256 levels.
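To show why the intermediate levels matter, here is a minimal sketch of the classic "over" blend for one pixel. It assumes 8-bit channels and straight (unpremultiplied) alpha; the function name `composite_over` is hypothetical and not part of Shotcut or MLT.

```python
def composite_over(fg, bg, alpha):
    """Blend one foreground pixel over a background pixel.

    fg, bg: (r, g, b) tuples with 8-bit values (0-255).
    alpha:  foreground opacity, 0 (fully transparent) to 255 (fully opaque).
    Assumes straight (unpremultiplied) alpha.
    """
    a = alpha / 255  # map the 256 alpha levels to 0.0-1.0
    # Each output channel is a weighted mix of foreground and background.
    return tuple(round(f * a + b * (1 - a)) for f, b in zip(fg, bg))


red, blue = (255, 0, 0), (0, 0, 255)
print(composite_over(red, blue, 255))  # fully opaque: the foreground wins
print(composite_over(red, blue, 128))  # about half red, half blue
print(composite_over(red, blue, 0))    # fully transparent: the background shows
```

With a binary mask only the first and last results would be possible; the in-between levels give soft edges and partial transparency.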

Aspect ratio

Aspect ratio is how the width of a rectangle compares with its height. In other words: is it wide or tall, and by how much? This is typically expressed as a ratio of width to height. For example, if a rectangle is twice as wide as it is high, we say it has a 2:1 aspect ratio. This can get complicated in video, where pixels themselves can have an aspect ratio that is distinct from the display aspect ratio. For example, SD NTSC video has a resolution of 720x480 but can have either a 4:3 or a 16:9 display aspect ratio, and neither uses square pixels! Modern video standards such as ATSC and UHD avoid non-square pixels, so the common HD resolution 1920x1080 reduces mathematically to 16:9, which is also the most common video aspect ratio in use today.

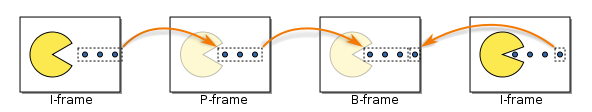
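The arithmetic behind these examples can be sketched in a few lines. This is an illustration under a simplifying assumption: it treats the full 720x480 frame as the displayed picture, whereas broadcast standards define SD pixel aspect ratios more precisely. The function names are hypothetical.

```python
from fractions import Fraction
from math import gcd


def reduce_ratio(width, height):
    """Reduce a resolution such as 1920x1080 to its simplest width:height ratio."""
    g = gcd(width, height)
    return width // g, height // g


def pixel_aspect_ratio(width, height, display_aspect):
    """Pixel (sample) aspect ratio needed for a width x height frame
    to fill the given display aspect ratio (simplified: whole frame shown)."""
    return display_aspect / Fraction(width, height)


print(reduce_ratio(1920, 1080))                          # 16:9 with square pixels
print(pixel_aspect_ratio(1920, 1080, Fraction(16, 9)))   # 1, i.e. square pixels
print(pixel_aspect_ratio(720, 480, Fraction(4, 3)))      # narrower-than-square pixels
print(pixel_aspect_ratio(720, 480, Fraction(16, 9)))     # wider-than-square pixels
```

Because 720/480 reduces to 3:2, which matches neither 4:3 nor 16:9, SD pixels must be stretched or squeezed on display, while 1920x1080 needs no correction.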

B frames

A bi-directionally predicted (B) frame is a video frame that specifies its content using the motion-compensated differences between itself and both the preceding and the following frames. By doing so, it typically uses fewer bits to store the information than both I-frames and P-frames.
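One practical consequence: because a B-frame refers to a *following* frame, that anchor frame (I or P) has to be decoded first, so frames are stored in a different order from the one in which they are shown. The hypothetical helper below sketches that reordering for a simple pattern; real encoders choose the order themselves and can use more complex reference structures.

```python
def decode_order(display):
    """Given frame types in display order (e.g. "IBBPBBP"), return the display
    indices in the order a decoder would need them.

    Simplified model: each run of B-frames depends on the anchor (I or P)
    that follows it, so the anchor is moved ahead of the run.
    """
    order, pending_b = [], []
    for index, frame_type in enumerate(display):
        if frame_type == "B":
            pending_b.append(index)      # must wait for the next anchor frame
        else:
            order.append(index)          # anchor frame is decoded first...
            order.extend(pending_b)      # ...then the B-frames that precede it
            pending_b = []
    # Trailing B-frames would normally reference the next GOP's I-frame.
    order.extend(pending_b)
    return order


print(decode_order("IBBPBBP"))
```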

Bitrate

Bitrate refers to the quantity of data (number of bits) per unit of time, usually expressed in bits per second. Most digital audio and video formats use compression to save space, and how well the data compresses varies with its content. For instance, a clear blue sky contains relatively little information and generally compresses well, while a more complex scene compresses less well. Bitrate is also affected by other factors such as the compression algorithm used and the quality level chosen.
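Since bitrate is bits per second, file size follows from simple arithmetic. The sketch below uses decimal units (1 MB = 1,000,000 bytes) and ignores container overhead; for a variable-bitrate file it gives the average. The function names are illustrative.

```python
def file_size_bytes(bitrate_bps, duration_s):
    """Approximate size of a stream: bits per second x seconds, divided by 8 bits per byte."""
    return bitrate_bps * duration_s / 8


def average_bitrate_bps(size_bytes, duration_s):
    """Inverse calculation: the average bitrate implied by a file's size and duration."""
    return size_bytes * 8 / duration_s


# One minute at 8 Mb/s is roughly 60 MB (decimal megabytes).
print(file_size_bytes(8_000_000, 60))
print(average_bitrate_bps(60_000_000, 60))
```

For a file with both video and audio, add the two bitrates before multiplying.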

Clip

In video editing, a clip is a segment of video footage or audio that is a distinct unit within the overall project. Clips vary in length and content, ranging from just a few frames to several minutes long. They are the building blocks of a video project and can be manipulated, arranged, and edited together to create the final product. Clips can be sourced from recordings or imported media files, or generated within Shotcut itself via “Open Other…” (for example, Color, Text, or Animation (Glaxnimate) clips). Clips may contain both video and audio, or only video or only audio, depending on the nature of the project. Clips are primarily used in the Timeline for editing but can also be used in the Source panel and in the Playlist for previewing and organization.

Codec

A codec comprises two components, an encoder and a decoder, hence the name (coder-decoder). Examples of video codecs are H.264, H.265, and VP9. Codecs use various technologies to compress data. The compression can be either lossless, in which case decoding produces exactly the same data that was encoded, or lossy, in which case some of the encoded information is discarded and decoding produces only an approximation. With lossy compression, the higher the compression, the more data is lost. In general, lossless codecs produce much larger files than lossy ones. Some codecs are more efficient than others in the amount of data they need to produce videos of equivalent quality; for example, H.265 generally produces smaller files than H.264 at equivalent quality. However, the more complex methods needed to achieve this usually mean that encoding and decoding take longer.

See also Prologue | Codec Wiki
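The difference between lossless and lossy compression can be shown with a toy example. Note the stand-ins: `zlib` is a general-purpose lossless compressor rather than a video codec, and plain quantization is only one ingredient of real lossy codecs. The helper names are hypothetical.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress with zlib (DEFLATE); the result is bit-identical."""
    return zlib.decompress(zlib.compress(data))


def lossy_roundtrip(samples, step):
    """Quantize each sample to the nearest multiple of `step`.

    Only the rounded codes would be stored, so the detail below `step`
    is discarded and the decoder can only reconstruct an approximation.
    A larger step means more compression and more loss.
    """
    codes = [round(s / step) for s in samples]  # what an encoder would store
    return [c * step for c in codes]            # what a decoder can rebuild


sky = b"blue sky " * 100                        # repetitive data compresses well
print(len(sky), len(zlib.compress(sky)))
print(lossless_roundtrip(sky) == sky)           # exact copy
print(lossy_roundtrip([10, 13, 17, 22], 4))     # close to, but not equal to, the input
```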

Colorspace

Color space describes how color and light are represented, especially numerically. In video and computer images, the two most popular systems of organization are RGB and YUV (or Y’CbCr). This is a complicated subject area; you can read more on Wikipedia.
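As a small illustration of how the same color can be described in two systems, this sketch converts gamma-corrected RGB to Y’CbCr using the BT.601 luma weights. It uses unscaled values (Y’ from 0 to 1, Cb and Cr from -0.5 to 0.5) and omits the offsets and integer scaling that real video formats add; HD video typically uses the different BT.709 weights (0.2126, 0.7152, 0.0722). The function name is hypothetical.

```python
def rgb_to_ycbcr_bt601(r, g, b):
    """Convert gamma-corrected R'G'B' (each 0.0-1.0) to unscaled Y'CbCr.

    Y' (luma) is a weighted sum of the three channels; Cb and Cr are the
    blue and red color differences, normalized to the range -0.5..0.5.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = (b - y) / 1.772  # 1.772 = 2 * (1 - 0.114)
    cr = (r - y) / 1.402  # 1.402 = 2 * (1 - 0.299)
    return y, cb, cr


print(rgb_to_ycbcr_bt601(1.0, 1.0, 1.0))  # white: full luma, no color difference
print(rgb_to_ycbcr_bt601(0.0, 0.0, 1.0))  # pure blue: low luma, maximum Cb
```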

Deinterlacer

A deinterlacer is an algorithm that converts interlaced video to progressive scan. See the Interlace and Progressive entries below for definitions of these two terms.
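The simplest kind of deinterlacer, often called "bob", turns each field into its own full-height frame by repeating every line. The sketch below models a frame as a list of rows and is only an illustration; the function name and the list-of-rows model are assumptions, and better deinterlacers interpolate the missing lines and detect motion instead of duplicating lines.

```python
def bob_deinterlace(frame, top_field_first=True):
    """Turn one interlaced frame (a list of rows) into two progressive frames.

    The top field is rows 0, 2, 4, ...; the bottom field is rows 1, 3, 5, ...
    Each field is line-doubled back to full height, so the output has
    twice the frame rate of the input.
    """
    top, bottom = frame[0::2], frame[1::2]
    first, second = (top, bottom) if top_field_first else (bottom, top)

    def line_double(field):
        doubled = []
        for row in field:
            doubled.extend([row, row])  # repeat each row to fill the gap
        return doubled

    return [line_double(first), line_double(second)]


print(bob_deinterlace(["a", "b", "c", "d"]))
```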

Field order

Interlaced video consists of two fields per frame. Field order describes which field (top or bottom) comes before the other in storage and/or display.

GOP

A GOP, or Group Of Pictures, specifies the order in which intra-frames (I-frames) and inter-frames (B- and P-frames) are arranged. The GOP is a collection of successive frames within a coded video stream, and each coded video stream consists of successive GOPs from which the visible frames are generated. When a decoder encounters a new (closed) GOP, it does not need any previous frames to decode the following ones, which allows fast seeking through the video. The GOP structure is often referred to by two numbers, for example M=3, N=12. The first number is the distance between two anchor frames (I or P). The second is the distance between two full images (I-frames), which is the GOP size. For M=3, N=12, the GOP structure is IBBPBBPBBPBB, followed by the I-frame that starts the next GOP (IBBPBBPBBPBBI).
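The M/N notation maps directly onto a frame-type pattern. The hypothetical helper below builds one GOP in display order, assuming a fixed pattern; real encoders may vary the structure, for example by inserting an extra I-frame at a scene cut.

```python
def gop_pattern(m, n):
    """Frame types for one GOP in display order.

    m: distance between anchor frames (I or P).
    n: GOP size, the distance between I-frames.
    Frame 0 is the I-frame, every m-th frame after it is a P-frame,
    and the frames in between are B-frames.
    """
    types = []
    for i in range(n):
        if i == 0:
            types.append("I")
        elif i % m == 0:
            types.append("P")
        else:
            types.append("B")
    return "".join(types)


print(gop_pattern(3, 12))                          # one GOP
print(gop_pattern(3, 12) + gop_pattern(3, 12)[0])  # plus the next GOP's I-frame
```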

GUI

GUI is short for Graphical User Interface. Unlike a Command Line Interface (CLI), which lets users interact with an application only by typing commands, a GUI consists of various widgets (graphical elements such as buttons, scrollbars, color palettes, etc.) that let users control the application and receive feedback to help them decide how to proceed.

I frame

An intra-coded (I) frame, also called a keyframe (not to be confused with keyframes used for animating filter parameters), is a video frame that is coded independently of all other frames. Consequently, it uses more bits to store the information than B-frames and P-frames. Each GOP begins (in decoding order) with this type of frame.

Interlace

Interlace is a simple form of video compression that uses two images of half vertical resolution to represent a full frame. In effect, it doubles the refresh rate for the same data rate. Each half-vertical-resolution image is called a field. Typically the fields are interleaved in storage (one field on the even lines, the other on the odd lines) and then displayed one after the other during playback, each drawing every other line.
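The interleaving described above can be modeled with a frame as a list of rows: one field takes the even rows, the other takes the odd rows, and "weaving" them back together restores the frame. The field order (see above) decides which field is shown first. The function names and the list-of-rows model are illustrative assumptions, and the sketch assumes an even number of rows.

```python
def split_fields(frame, top_field_first=True):
    """Split an interlaced frame (list of rows) into its two fields,
    returned in display order according to the field order."""
    top = frame[0::2]     # rows 0, 2, 4, ...
    bottom = frame[1::2]  # rows 1, 3, 5, ...
    return (top, bottom) if top_field_first else (bottom, top)


def weave(top, bottom):
    """Interleave a top and a bottom field back into a full frame."""
    frame = []
    for top_row, bottom_row in zip(top, bottom):
        frame.extend([top_row, bottom_row])
    return frame


rows = ["r0", "r1", "r2", "r3"]
top, bottom = split_fields(rows)
print(top, bottom)
print(weave(top, bottom) == rows)
```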

Interpolation

Interpolation is the computation of values based on neighboring values. With respect to Settings, it is easiest to think of this as the quality level when changing the size of an image. Interpolation is also a term used for animating parameters in Keyframes.
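To see why interpolation affects scaling quality, compare two common methods on a single row of pixel values. Nearest-neighbor copies the closest source pixel (fast, but blocky), while linear interpolation blends the two neighbors (smoother). This one-dimensional sketch is illustrative only; it does not reproduce Shotcut's actual scalers, and the function names are hypothetical.

```python
def resize_nearest(row, new_len):
    """Nearest-neighbor resize: each output pixel copies one source pixel."""
    old_len = len(row)
    return [row[min(old_len - 1, int(i * old_len / new_len))] for i in range(new_len)]


def resize_linear(row, new_len):
    """Linear resize: each output pixel blends its two nearest source pixels."""
    old_len = len(row)
    if new_len == 1:
        return [row[0]]
    out = []
    for i in range(new_len):
        x = i * (old_len - 1) / (new_len - 1)  # position in source coordinates
        x0 = int(x)
        x1 = min(x0 + 1, old_len - 1)
        t = x - x0                             # fractional distance past x0
        out.append(row[x0] * (1 - t) + row[x1] * t)
    return out


edge = [0, 100]                # a dark pixel next to a bright one
print(resize_nearest(edge, 4))  # hard step
print(resize_linear(edge, 3))   # gradual transition
```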

Keyframe

A keyframe defines a specific value or set of values at a specific point in time. The term is used when talking about animating parameter values in Keyframes. It is also used in temporal video compression, where a keyframe is an I-frame, as opposed to the so-called delta frames (P- and B-frames).
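For animation, a parameter's value between two keyframes is computed by interpolation. The sketch below assumes keyframes stored as a sorted list of hypothetical `(time, value)` pairs and uses linear interpolation, holding the first and last values outside the keyframed range; other interpolation modes exist and would use a different formula between keyframes.

```python
from bisect import bisect_right


def value_at(keyframes, t):
    """Evaluate an animated parameter at time t.

    keyframes: list of (time, value) pairs sorted by time.
    Between keyframes the value changes linearly; before the first
    and after the last keyframe it is held constant.
    """
    times = [time for time, _ in keyframes]
    i = bisect_right(times, t)       # index of the first keyframe after t
    if i == 0:
        return keyframes[0][1]
    if i == len(keyframes):
        return keyframes[-1][1]
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical opacity animation: fade in over 10 frames, then partly out.
opacity = [(0, 0.0), (10, 100.0), (20, 50.0)]
print([value_at(opacity, t) for t in (0, 5, 10, 15, 25)])
```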

Metadata

Metadata is data about other data. In the context of multimedia, the media data (audio/video) is the core data, and all other data in the file is metadata. There can be metadata about media attributes such as resolution or number of audio channels, and there can be metadata about the context of the media file such as its creator, creation date, title, etc.

MLT

MLT is a separate open-source software project that serves as the engine of Shotcut. Shotcut is primarily the user interface running on top of this engine. The engine provides some effects of its own, but it also uses other libraries such as FFmpeg, Qt, WebKit, frei0r, LADSPA, etc.

P frame

A predicted (P) frame is a video frame that specifies its content using the motion-compensated differences between itself and the preceding frame. By doing so, it typically uses fewer bits to store the information than I-frames, but more than B-frames.
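The core idea of storing only differences can be sketched with plain frame differencing. This deliberately omits motion compensation (shifting blocks along motion vectors before subtracting), as well as the transform and entropy coding that real codecs apply; the function names are hypothetical and frames are modeled as flat lists of pixel values.

```python
def encode_p(previous, current):
    """Store only the per-pixel difference from the previous frame."""
    return [c - p for p, c in zip(previous, current)]


def decode_p(previous, residual):
    """Rebuild the current frame from the previous frame plus the residual."""
    return [p + r for p, r in zip(previous, residual)]


previous = [10, 10, 10, 10, 10, 10]
current = [10, 10, 12, 13, 10, 10]     # only a small area changed
residual = encode_p(previous, current)
print(residual)                        # mostly zeros, which compress well
print(decode_p(previous, residual) == current)
```

Because the residual depends on the previous frame, a P-frame cannot be decoded on its own, which is why seeking has to start from an I-frame.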

Progressive

In video, progressive refers to a scan mode in which each frame of video is a whole picture from a single point in time. (“Scan” comes from old tube-based TV technology, in which a cathode ray draws the picture line by line.) This is the opposite of interlace.

Resolution

Resolution (display resolution) is the size of a video or image in pixels, given as width x height. Example sizes: 3840x2160 (4K), 1920x1080 (HD), 1280x720 (HD), 720x480 (SD), 1080x1380 (Vertical).

Ripple

Ripple means that an operation can affect the clips on the timeline that come after the clip being changed. For example, a ripple delete removes not only the clip but also the space it occupies, which requires changing the start time of all the following clips.
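A ripple delete on a single track can be sketched as follows. The `Clip` type, the frame-based positions, and `ripple_delete` are hypothetical simplifications; this is not how Shotcut or MLT represent a timeline internally.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    start: int   # position on the track, in frames
    length: int  # duration, in frames


def ripple_delete(track, index):
    """Remove track[index] and close the gap it leaves.

    Every clip that starts at or after the end of the removed clip
    is moved earlier by the removed clip's length.
    """
    removed = track[index]
    removed_end = removed.start + removed.length
    result = []
    for i, clip in enumerate(track):
        if i == index:
            continue
        if clip.start >= removed_end:
            clip = Clip(clip.name, clip.start - removed.length, clip.length)
        result.append(clip)
    return result


track = [Clip("a", 0, 10), Clip("b", 10, 5), Clip("c", 15, 20)]
print(ripple_delete(track, 1))  # "c" now starts at frame 10
```

A plain (non-ripple) delete would simply drop the clip and leave "c" at frame 15, with a gap in between.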

Sample rate

Sample rate refers to how many times per second a sample is taken of the audio or video. For instance, in the real analog world, a car driving down the street moves continuously, but a video of that consists of individual snapshots taken at regular intervals. If you could slow down time and watch the car, the real world car would still move smoothly; however, the video would show the car jumping from one position to another. Common sample rates for video include 24, 25, 30, and 60 frames per second; common sample rates for audio include 44,100 and 48,000 samples per second.
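Video and audio sample rates must line up during editing, so it is often useful to know how many audio samples fall within one video frame. This small sketch uses exact fractions; the function name is illustrative.

```python
from fractions import Fraction


def audio_samples_per_frame(sample_rate, frame_rate):
    """Audio samples that fall within one video frame, as an exact fraction."""
    return Fraction(sample_rate) / Fraction(frame_rate)


print(audio_samples_per_frame(48000, 25))                       # a whole number
print(audio_samples_per_frame(48000, Fraction(30000, 1001)))    # not a whole number
```

At 30000/1001 fps the result is not an integer, so the number of samples per frame has to alternate slightly (1601 or 1602) to stay in sync on average.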

Scrubbing

Scrubbing is seeking by clicking an object and dragging it, typically the playhead in the player or timeline. It can also refer simply to rewinding or fast-forwarding playback through media.

Skimming

Skimming is seeking based on the horizontal position of the mouse over the video image or timeline. You press and hold the Shift + Alt keys in Shotcut to enable skimming.

Snapping

Snapping is a timeline setting that helps you slide two clips together on the same track with no gap between them.
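Conceptually, snapping pulls a dragged position onto a nearby edge when it comes close enough. The sketch below shows that idea with a hypothetical `snap` function and an arbitrary threshold; Shotcut's actual snapping rules and distances may differ.

```python
def snap(position, edges, threshold):
    """Return the nearest edge if it is within `threshold`, else the position itself.

    position:  where the dragged clip edge currently is (e.g. in frames).
    edges:     edges of other clips (and the playhead) it may snap to.
    threshold: how close the position must be before it snaps (an assumption).
    """
    nearest = min(edges, key=lambda edge: abs(edge - position), default=None)
    if nearest is not None and abs(nearest - position) <= threshold:
        return nearest
    return position


edges = [0, 100, 250]         # hypothetical clip edges on the track
print(snap(98, edges, 5))     # close to 100, so it snaps there
print(snap(90, edges, 5))     # too far from any edge, so it stays put
```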

Scan mode

Scan mode indicates whether video is progressive or interlaced; see the related definitions above.

Threads

Threads are a software programming mechanism that lets multiple things occur at the same time. Most CPUs now consist of multiple execution units typically called “cores.” Often, each core supports more than one hardware “thread,” which you can think of as a lightweight core (not completely parallel). While it is important that your operating system lets multiple things run at the same time to use these CPU cores and threads, it is also important that Shotcut itself runs things at the same time (i.e. parallel processing), because media decompression, processing, and compression are very computationally heavy. You can learn more about how Shotcut uses multiple cores and threads in the FAQ.
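The general pattern of spreading per-frame work across threads looks like this sketch, which uses Python's standard `concurrent.futures` module. The `process_frame` function is a hypothetical placeholder for real decoding, filtering, or encoding. One caveat: in CPython, pure-Python CPU-bound code does not run truly in parallel across threads because of the global interpreter lock, so this shows the structure of the approach rather than the speedup a native engine such as MLT can achieve.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def process_frame(frame_number):
    """Hypothetical placeholder for the heavy work done on one frame."""
    return frame_number * 2


def process_all(frame_numbers, workers=None):
    """Process frames on a pool of worker threads.

    Defaults to one worker per logical CPU reported by the OS.
    pool.map returns results in input order, even if frames finish out of order.
    """
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_frame, frame_numbers))


print(process_all(range(8)))
```

Keeping the results in frame order matters for media: frames can be processed out of order, but they must be written out in sequence.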

Timecode

Timecode is a way to represent time numerically. Shotcut uses the SMPTE timecode standard from the Society of Motion Picture and Television Engineers. It displays the running time of video in a form that is frame-accurate yet easier for humans to understand than a pure frame count: hours, minutes, seconds, and frames in the format HH:MM:SS:FF. For many video modes that use a non-integer frame rate (e.g. 29.970030 or 30000/1001 fps), a semicolon (;) is the delimiter between seconds and frames to indicate that the timecode is drop-frame. Drop-frame is a technique that keeps the timecode in step with real time over long durations. For 30000/1001 fps, drop-frame skips two frame numbers at the start of every minute except every tenth minute; no actual video frames are discarded.
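Converting a frame count to timecode shows how drop-frame works. This sketch assumes a nominal rate of 30 (used for both 30 and 30000/1001 fps) and the conventional drop-frame rule of skipping frame numbers ;00 and ;01 at each minute not divisible by ten. The function name is hypothetical and is not taken from Shotcut or MLT.

```python
def frames_to_timecode(frame, drop_frame=False):
    """Convert a zero-based frame count to HH:MM:SS:FF (or HH:MM:SS;FF for drop-frame).

    Assumes a nominal timecode rate of 30 frames per second.
    """
    fps = 30
    if drop_frame:
        frames_per_10_min = 10 * 60 * fps - 9 * 2  # 17982 real frames per 10 minutes
        frames_per_min = 60 * fps - 2               # 1798 real frames per dropped minute
        tens, rem = divmod(frame, frames_per_10_min)
        frame += 18 * tens                          # 9 dropped minutes x 2 labels each
        if rem > 2:
            frame += 2 * ((rem - 2) // frames_per_min)
    ff = frame % fps
    total_seconds = frame // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = total_seconds // 3600
    separator = ";" if drop_frame else ":"
    return f"{hh:02d}:{mm:02d}:{ss:02d}{separator}{ff:02d}"


print(frames_to_timecode(1799, drop_frame=True))   # last frame of the first minute
print(frames_to_timecode(1800, drop_frame=True))   # ;00 and ;01 are skipped
print(frames_to_timecode(17982, drop_frame=True))  # tenth minute: nothing skipped
print(frames_to_timecode(1800))                    # non-drop-frame for comparison
```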

UI

UI is short for User Interface. This is the mechanism whereby users interact with the application (Shotcut). The goal of this interaction is to allow effective operation and control of the application from the human end, while the application concurrently feeds back information that aids the user’s decision-making process.

VUI

VUI is short for Visual User Interface. In the context of Shotcut, this is an interface that appears in the preview area of the screen when certain filters, like the Text: Rich filter or the Size, Position & Rotate filter, are active. The VUI enables users to manipulate parameters by dragging handles and/or typing directly in the preview window rather than changing the parameters manually in the filters panel.

XML

XML is a text format that is designed to be both human- and machine-readable and writable. It is standardized, structured, and extensible (the X in eXtensible Markup Language). There are many dialects of XML, and when one video editor says it reads “XML,” that does not mean it can read the XML that another video editor exports. They need to use the same kind of XML. Shotcut reads and writes MLT XML, but at this time it can fully understand only the MLT XML that it writes.
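Because XML is standardized, any generic parser can read the structure of a file, but what the elements *mean* depends on the dialect. The sketch below reads a tiny, hand-written fragment loosely modeled on MLT XML with Python's standard `xml.etree.ElementTree`. The fragment and the `list_resources` helper are illustrative assumptions; real Shotcut project files contain many more elements and properties.

```python
import xml.etree.ElementTree as ET

# Simplified, hand-written fragment for illustration only.
MLT_SNIPPET = """<mlt>
  <producer id="producer0">
    <property name="resource">intro.mp4</property>
  </producer>
  <producer id="producer1">
    <property name="resource">title.png</property>
  </producer>
</mlt>"""


def list_resources(xml_text):
    """Map each producer id to the file named in its "resource" property."""
    root = ET.fromstring(xml_text)
    resources = {}
    for producer in root.iter("producer"):
        for prop in producer.findall("property"):
            if prop.get("name") == "resource":
                resources[producer.get("id")] = prop.text
    return resources


print(list_resources(MLT_SNIPPET))
```

The parser knows nothing about "producers" or "resources"; that interpretation comes from knowing the MLT dialect, which is why another editor's XML cannot simply be read in the same way.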