Nice research!

This is great when it’s an option. If the source is blisteringly sharp, high-quality 4K, then the difference between bilinear and bicubic can become very noticeable. Cheap cell phone footage and old HDV home video would be less problematic, because those sources often don’t have enough detail to reveal the weaknesses of bilinear scaling.

Scaling quality could also depend on how big the size change is. For a small change, the difference could be unnoticeable. But enlarging or reducing by a factor of 3 or more would quickly reveal the quality difference.

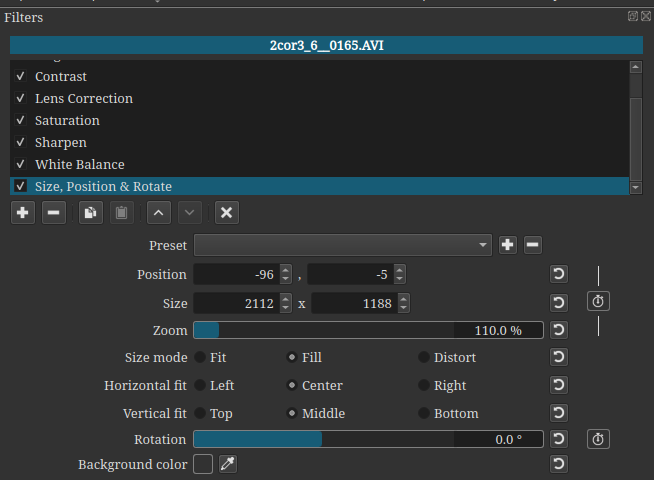

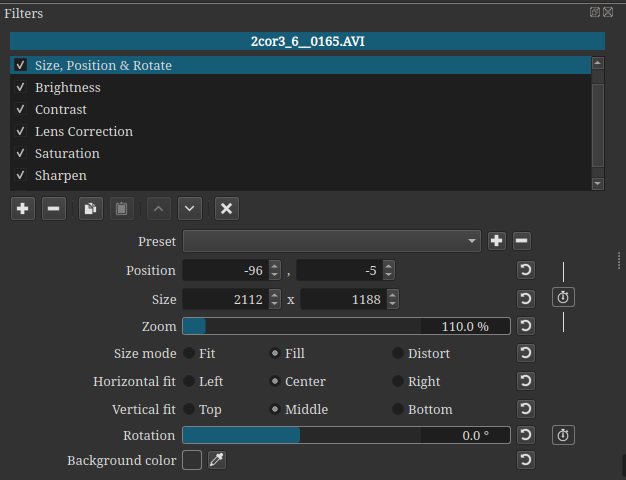

I wonder if this depends on “canvas size”. If SPR shrinks a video down to a quarter of its original size first, would every filter after it only need to operate on a quarter of the data, and is that where the speed difference comes from? Less work for the following filters? If SPR is last, then Saturation etc. would in theory operate on full-size frames, and their results would only be shrunk down at the end. If this were true, we would expect the final export time to increase when SPR makes a video larger rather than smaller (assuming it doesn’t get clipped by the timeline dimensions).
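To put rough numbers on that guess, here is a back-of-the-envelope sketch in Python. It assumes each filter’s cost is simply proportional to the number of pixels it touches, which is a simplification and not a measurement of how Shotcut actually processes frames. The function name, the three-filter chain, and the resolutions are all made up for illustration.

```python
def filter_pixels_per_frame(width, height, n_filters, scale, resize_first):
    """Pixels touched per frame by the filters other than the resize itself.

    Assumption: each filter's cost is proportional to the pixel count it sees.
    `scale` is the linear scale factor (0.5 halves width and height,
    which leaves a quarter of the pixels).
    """
    full = width * height
    scaled = round(width * scale) * round(height * scale)
    per_filter = scaled if resize_first else full
    return n_filters * per_filter


# Shrinking 4K to a quarter of its area, with three other filters in the chain.
resize_first_cost = filter_pixels_per_frame(3840, 2160, 3, 0.5, resize_first=True)
resize_last_cost = filter_pixels_per_frame(3840, 2160, 3, 0.5, resize_first=False)
print(resize_last_cost / resize_first_cost)  # 4.0

# Enlarging 1080p by 2x flips the result: resizing first now costs more.
enlarge_first_cost = filter_pixels_per_frame(1920, 1080, 3, 2.0, resize_first=True)
enlarge_last_cost = filter_pixels_per_frame(1920, 1080, 3, 2.0, resize_first=False)
print(enlarge_first_cost / enlarge_last_cost)  # 4.0
```

Under that assumption, the other filters do four times less work when the shrink comes first, and four times more when an enlargement comes first. Whether export times actually follow this would need to be tested.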

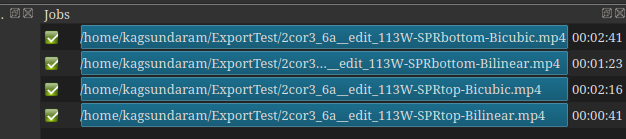

As for the difference between bilinear and bicubic SPR… bicubic is simply that much more expensive to calculate, and will take about 2x longer than bilinear.
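A rough way to see why bicubic costs more: bilinear blends a 2x2 neighbourhood of source pixels for each output pixel, while bicubic blends a 4x4 neighbourhood. The sketch below just counts those samples. It is textbook arithmetic, not a profile of Shotcut’s scaler, whose implementation I haven’t inspected. The function names are made up, and the “separable” count ignores the size of the intermediate image.

```python
BILINEAR_WIDTH = 2  # samples per axis read by bilinear interpolation
BICUBIC_WIDTH = 4   # samples per axis read by bicubic interpolation


def taps_direct(kernel_width):
    """Source samples per output pixel for a direct 2-D kernel."""
    return kernel_width ** 2


def taps_separable(kernel_width):
    """Rough sample count when the kernel is applied as two 1-D passes."""
    return 2 * kernel_width


direct_ratio = taps_direct(BICUBIC_WIDTH) / taps_direct(BILINEAR_WIDTH)
separable_ratio = taps_separable(BICUBIC_WIDTH) / taps_separable(BILINEAR_WIDTH)
print(direct_ratio)     # 4.0
print(separable_ratio)  # 2.0
```

So bicubic reads somewhere between 2x and 4x as many samples, depending on how the scaler is written. Memory bandwidth and SIMD matter too, so the measured slowdown won’t necessarily match either number.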

Option 4: Shotcut is not multi-threaded enough to take advantage of all the available CPU cores, so some of them sit idle. There’s nothing a user can do to optimize this except buy processors that favor fast clock speeds over high core counts.

True. But the current version of Shotcut isn’t even using the GPU for filters, so the discussion was theoretical and moot. In light of this, buying a beefy GPU for Shotcut currently makes no sense. The only benefit would be 7th generation NVENC for higher quality hardware encoding. But encoding barely touches the CUDA cores (under 5% usage), so a cheap GTX 1650 Super is the most anyone needs to spend.

High clock speed is where the party’s currently at for Shotcut exporting. The i7-8700K is a beast: a good balance between high clock speed and core count if doing software H.264/HEVC encoding.

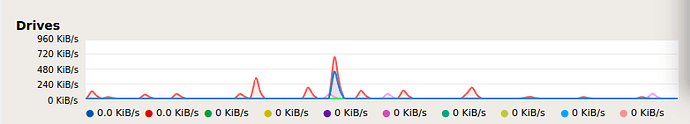

For most people, this will be true. If the input and output formats are highly compressed like H.264, then disk usage is minimal. If intermediate or lossless codecs like Ut Video get involved, they can saturate the disk link quickly since their file sizes are insanely large.
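For a sense of scale, here is a quick Python calculation comparing the uncompressed data rate of 4K video against a compressed stream. The 30 fps frame rate, the 8-bit 4:2:2 pixel format, and the 50 Mb/s H.264 bitrate are illustrative assumptions, not figures from this thread. A lossless codec like Ut Video compresses below the raw rate, but I’m not assuming any particular ratio here, so treat the raw number as an upper bound.

```python
def raw_rate_mb_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate in megabytes per second."""
    return width * height * bytes_per_pixel * fps / 1e6


def bitrate_to_mb_per_s(megabits_per_second):
    """Convert a stream bitrate in megabits/s to megabytes/s."""
    return megabits_per_second / 8


# 8-bit 4:2:2 averages 2 bytes per pixel (assumed format).
raw_4k = raw_rate_mb_per_s(3840, 2160, 2, 30)
# Hypothetical 50 Mb/s H.264 file.
h264 = bitrate_to_mb_per_s(50)

print(f"raw 4K: {raw_4k:.1f} MB/s, H.264: {h264:.2f} MB/s")
```

Even if a lossless codec halved the raw figure, it would still be well over an order of magnitude more disk traffic than the compressed file.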