For the sake of anyone else reading along who needs a conclusion about how the Shotcut scopes work and whether there are any problems: the scopes are fine. For the gritty details, let’s start from the top:

IRE

IRE is a percentage measurement for voltage (implying an analog signal) where 0 IRE is always 0 mV, and 100 IRE is the maximum protocol voltage (usually the whitest white that can be displayed).

What a given IRE level means depends heavily on the video specification being used. For NTSC composite signals, pure black is 53.57 mV, which corresponds to 7.5 IRE. Note that 0 IRE does not automatically mean black. It only means zero voltage, and what zero voltage represents is up to the specification.
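As a quick sanity check on that 53.57 mV figure, here is a small Python sketch. It assumes the usual NTSC convention that 140 IRE spans the 1 V peak-to-peak composite signal (so 1 IRE is about 7.14 mV). That convention isn’t stated above, and the function names are just for illustration.

```python
# Assumption: NTSC convention where the 1 V peak-to-peak composite signal
# spans 140 IRE (-40 IRE sync tip to +100 IRE white), so 1 IRE = 1000/140 mV.
MV_PER_IRE_NTSC = 1000.0 / 140.0


def ntsc_ire_to_mv(ire: float) -> float:
    """Convert an NTSC IRE level to millivolts above blanking (0 IRE = 0 mV)."""
    return ire * MV_PER_IRE_NTSC


def ntsc_mv_to_ire(mv: float) -> float:
    """Convert millivolts above blanking back to NTSC IRE."""
    return mv / MV_PER_IRE_NTSC


if __name__ == "__main__":
    print(f"7.5 IRE (black) = {ntsc_ire_to_mv(7.5):.2f} mV")  # 53.57 mV
    print(f"100 IRE (white) = {ntsc_ire_to_mv(100):.2f} mV")  # 714.29 mV
```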

Fortunately, BT.709 uses a different voltage range than composite video. In the BT.709 spec, table 6.1 shows that black is signaled by 0 mV, and white is signaled by 700 mV. Since black is signaled by limited-range Y 16, this is extremely convenient and intuitive because black = Y 16 = 0 mV = 0 IRE.

At this point, we can establish that 0 IRE means “blackest black” and 100 IRE means “whitest white” for BT.709.

Next, if we think of analog sRGB data going over a VGA cable, we also see that black = RGB 0 = 0 mV = 0 IRE, and white = RGB 255 = 700 mV = 100 IRE.

This makes the meaning of IRE consistent between these two particular color spaces: zero means black, and 100 means white. It works because sRGB was derived from the BT.709 specification, and they both use their voltage maximums of 700 mV to signal white. This IRE equality wouldn’t necessarily happen with other color spaces.
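To make the “same IRE, different encoding” point concrete, here is a minimal Python sketch that maps 8-bit limited-range BT.709 Y and full-range sRGB/VGA RGB to nominal voltages, and then to IRE. It assumes only the 0 mV / 700 mV levels quoted above and linear scaling between them. The helper names are hypothetical.

```python
# Assumed nominal levels (from the figures quoted above):
#   BT.709 analog: reference black = 0 mV, reference white = 700 mV
#   8-bit limited-range Y: black = 16, white = 235 (219 steps)
#   VGA carrying full-range sRGB: RGB 0 = 0 mV, RGB 255 = 700 mV
WHITE_MV = 700.0


def bt709_limited_y_to_mv(y: int) -> float:
    """Map an 8-bit limited-range Y code to its nominal analog voltage."""
    return (y - 16) / 219.0 * WHITE_MV


def vga_rgb_to_mv(v: int) -> float:
    """Map an 8-bit full-range RGB component to its nominal VGA voltage."""
    return v / 255.0 * WHITE_MV


def mv_to_ire(mv: float) -> float:
    """IRE as a percentage of the 700 mV white level (0 mV = 0 IRE)."""
    return mv / WHITE_MV * 100.0


if __name__ == "__main__":
    samples = [
        ("BT.709 Y 16", bt709_limited_y_to_mv(16)),
        ("BT.709 Y 235", bt709_limited_y_to_mv(235)),
        ("sRGB 0", vga_rgb_to_mv(0)),
        ("sRGB 255", vga_rgb_to_mv(255)),
    ]
    for label, mv in samples:
        print(f"{label:>12}: {mv:5.1f} mV = {mv_to_ire(mv):5.1f} IRE")
```

Both encodings land on 0 IRE for black and 100 IRE for white, even though one uses codes 16-235 and the other 0-255.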

However, an important distinction is that BT.709 IRE as illustrated so far represents limited-range YCbCr (16-235), whereas sRGB IRE represents full-range RGB (0-255). Yet, they land on the same black and white voltages and trigger the same black and white reference values. This is the beauty of the Shotcut scope. It’s possible to graph and match the brightness of clips from different color spaces and ranges provided the black voltages are the same. It is not necessary to have separate graticules for each range. Even if attempted, the graticules would look the same because max white and max black land in the same places for both ranges, as demonstrated above.

BT.709 vs sRGB

A video stream encoded in BT.709 must be converted before it can be displayed on a computer with an sRGB video card and monitor. Errors from rounding, out-of-gamut colors, and inaccurate conversion functions are why using a color picker on an RGB display says nothing useful about the YCbCr stream that generated it: the color picker collects errors from the conversion algorithm. Note that the sRGB transfer function is technically piecewise, with a linear segment near black and a power curve above it. Quality editors do the full math, but many programs approximate the curve with one simple gamma conversion. If that happens, the resulting RGB values will not accurately reflect the YCbCr values underneath (especially for darker colors), nor will it be possible to accurately reverse-engineer the video’s Y value from the RGB sample.
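Here is a rough Python illustration of why the single-gamma shortcut hurts dark colors. The piecewise decode uses the standard sRGB constants (0.04045, 12.92, 0.055, 1.055, 2.4). The 2.2 exponent stands in for a generic “simple gamma” and is an assumption, not a claim about what any particular program does.

```python
def srgb_to_linear(v: float) -> float:
    """Piecewise sRGB decode: linear segment near black, 2.4 power above."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def gamma22_to_linear(v: float) -> float:
    """Single-exponent shortcut (assumed 2.2) that ignores the linear toe."""
    return v ** 2.2


if __name__ == "__main__":
    for code in (5, 10, 20, 50, 128, 255):
        v = code / 255.0
        exact, approx = srgb_to_linear(v), gamma22_to_linear(v)
        print(f"RGB {code:3d}: piecewise={exact:.6f}  gamma 2.2={approx:.6f}")
```

Near white the two curves nearly agree, but in the first few code values the shortcut is off by a large factor, which matches the “especially for darker colors” caveat.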

Back to BT.709 vs sRGB: conversion is relatively straightforward because BT.709 and sRGB have the same color primaries and white point, and differ mainly in their transfer characteristics.

Full vs Limited Range

The BT.709 specification contains both full-range and limited-range equations, although limited range is the only format allowed for over-the-air transmission and most physical distribution media. It’s important to know whether the result of a given equation is in full range or limited range.

To convert RGB values to BT.709 YCbCr, we use the equations in table 3.5 of the BT.709 specification:

D’y = Round(0.2126 D’r + 0.7152 D’g + 0.0722 D’b)

Technically, we would not use table 3.2, because E’ refers to the analog electrical part of the chain, and we’re already in the digital domain if we’re converting from RGB. Therefore, we use the digital D’ equations in table 3.5 (since we already have quantized RGB values). The luminance equation uses the same coefficients as E’y by design, but the Cb/Cr equations are very different, and this is significant.
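For reference, the table 3.5 luminance equation is easy to try in Python. This sketch assumes 8-bit code values and implements Round() as round-half-up (an assumption about the rounding mode). It also checks that equal R, G, and B values come out unchanged.

```python
import math


def bt709_luma(r: int, g: int, b: int) -> int:
    """D'y = Round(0.2126 D'r + 0.7152 D'g + 0.0722 D'b) for 8-bit codes."""
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return math.floor(y + 0.5)  # round half up (assumed rounding mode)


if __name__ == "__main__":
    # The coefficients sum to 1, so any gray (r == g == b) passes through.
    assert all(bt709_luma(v, v, v) == v for v in range(256))
    print(bt709_luma(255, 0, 0), bt709_luma(0, 255, 0), bt709_luma(0, 0, 255))
    # prints: 54 182 18
```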

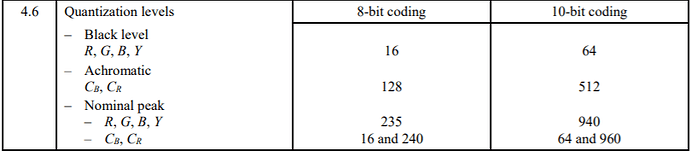

Note that table 3.5’s equations produce a full-range YCbCr value. That is, RGB 255 pushed through the luminance equation results in Y 255. In fact, any RGB gray value pushed through the luminance equation results in an identical value for D’y, because the coefficients add up to one (and one times anything is itself). Thus, Y 255 is the way to signal pure white in a full-range video file, and this is the purest form of YCbCr.

The math could stop here if it weren’t for the needs of television broadcasters. For their purpose of creating a legal broadcast signal, we look at table 4.6, which says Y must be compressed (scaled) to 16-235 and Cb/Cr must be compressed to 16-240. This has nothing to do with color purity or conversion theory. Actually, it makes color worse. It’s done as a bandwidth precaution taken by the broadcast industry for analog transmissions.
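A minimal sketch of the table 4.6 compression in Python, assuming 8-bit values, simple linear scaling, round-half-up, and chroma centered on 128 (a common convention). The function names are hypothetical. The last line counts how many distinct Y codes survive, which is one way to see why the squeeze costs precision.

```python
import math


def full_to_limited_y(y_full: int) -> int:
    """Compress full-range Y (0-255) into limited range (16-235)."""
    return math.floor(16 + y_full * 219 / 255 + 0.5)


def full_to_limited_c(c_full: int) -> int:
    """Compress full-range Cb/Cr (centered on 128) into 16-240."""
    return math.floor(128 + (c_full - 128) * 224 / 255 + 0.5)


if __name__ == "__main__":
    print(full_to_limited_y(0), full_to_limited_y(255))  # 16 235
    print(full_to_limited_c(0), full_to_limited_c(255))  # 16 240
    # 256 full-range Y codes collapse onto 220 limited-range codes.
    print(len({full_to_limited_y(v) for v in range(256)}))  # 220
```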

However, Y 235 by itself does not mean white. “Limited range” is a temporary sub-encoding, not a separate and unique specification. To calculate the RGB values needed to activate the pixel components of a TV screen, Y 235 has to be uncompressed to Y 255, where it can then be back-fed through the full-range equations in table 3.5 to get the RGB equivalents. Yes, there are other transforms that consolidate those steps for processing efficiency, but this is what’s happening under the hood.
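Here is a hedged Python sketch of that under-the-hood path: expand limited-range codes back to full range, then invert the BT.709 luma and color-difference relationships (Kr = 0.2126, Kb = 0.0722) to get RGB. The chroma expansion factor (255/224) and the clipping to 0-255 are assumptions for illustration. Real players may fold these steps into a single matrix, as mentioned above.

```python
import math

KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB  # 0.7152


def clamp8(x: float) -> int:
    """Round half up and clip to the 8-bit range a display can show."""
    return max(0, min(255, math.floor(x + 0.5)))


def limited_to_full(y: int, cb: int, cr: int):
    """Expand limited-range codes: Y 16-235 -> 0-255, chroma 16-240 -> about +/-128."""
    y_full = (y - 16) * 255 / 219
    cb_off = (cb - 128) * 255 / 224  # chroma kept as a signed offset from 128
    cr_off = (cr - 128) * 255 / 224
    return y_full, cb_off, cr_off


def full_ycbcr_to_rgb(y: float, cb_off: float, cr_off: float):
    """Invert Y = Kr*R + Kg*G + Kb*B and the Cb/Cr differences, then clip."""
    r = y + 2 * (1 - KR) * cr_off
    b = y + 2 * (1 - KB) * cb_off
    g = (y - KR * r - KB * b) / KG
    return clamp8(r), clamp8(g), clamp8(b)


if __name__ == "__main__":
    for ycbcr in [(16, 128, 128), (126, 128, 128), (235, 128, 128)]:
        print(ycbcr, "->", full_ycbcr_to_rgb(*limited_to_full(*ycbcr)))
```

Limited-range Y 235 only becomes RGB 255 white after the expansion step; fed directly into the full-range inverse, it would land short of white.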

IRE vs White Point

This is where things get nuanced, but also very convenient. BT.709 and sRGB use the same white point (CIE D65). For all practical purposes, the following maximal signal values will generate the same visual whiteness on a screen:

- Full-range Y 255 (the result of table 3.5 equations)

- Limited-range Y 235 (compression from table 4.6 applied)

- RGB 255 (assume full range unless metadata indicates otherwise)

Since those are all maximal signals within their specifications, they are also all 100 IRE. And they all look the same because they’re all D65.

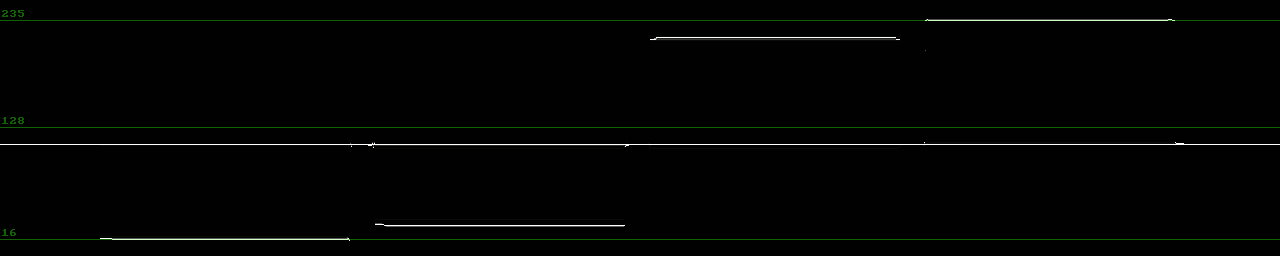

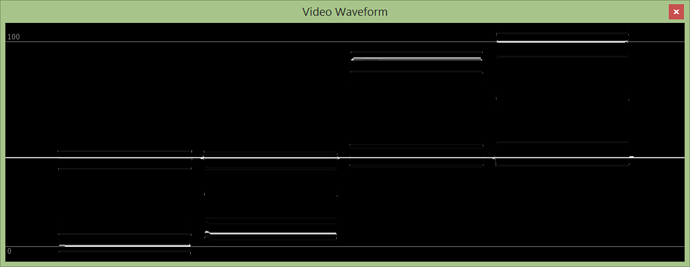

This means that the Shotcut scopes will show Y 255 as 100 IRE for full-range YCbCr, and Y 235 as 100 IRE for limited-range YCbCr, and RGB 255 as 100 IRE for any sRGB input file. This is correct in all cases, because those values represent maximum white (technically maximum voltage) within their specifications. An IRE waveform shows relative voltage, not absolute digital Y values. Y-value waveforms do exist, but this is not one of them. It would be incorrect to label Y 235 as 100 IRE when working on full-range video because Y 235 in full range has yet to reach the white point (maximum voltage).

Since 100 IRE (100 percent voltage) renders as the same white color (CIE D65) regardless of the range, we end up with a waveform graph whose Y-axis has fixed units from 0-100 IRE, but the resolution of the Y-axis is higher in full range (256 code values) than in limited range (220 code values). This requires scaling the waveform when switching ranges, and is why an IRE waveform must change in accordance with how the video is flagged. Technically, a Y-value waveform changes with the flag too, by moving the graticules that represent the black and white points.
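The range-dependent scaling can be sketched in a few lines of Python. This assumes 8-bit code values, black at 0 (full) or 16 (limited), and white at 255 or 235. It illustrates the idea and is not Shotcut’s actual scope code.

```python
def y_to_ire(y: int, full_range: bool) -> float:
    """Percent of the black-to-white span for an 8-bit Y code."""
    if full_range:
        return y / 255 * 100  # black 0, white 255 -> 256 codes
    return (y - 16) / 219 * 100  # black 16, white 235 -> 220 codes


if __name__ == "__main__":
    print(f"full    Y 255 -> {y_to_ire(255, True):6.2f} IRE")
    print(f"limited Y 235 -> {y_to_ire(235, False):6.2f} IRE")
    print(f"full    Y 235 -> {y_to_ire(235, True):6.2f} IRE")  # short of white
    print(f"IRE per code: full {100 / 255:.3f}, limited {100 / 219:.3f}")
```

The same 0-100 axis gets slightly finer steps per code value in full range, which is the resolution difference described above.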

Using IRE for the waveform is a convenient way of showing the span from black to in-gamut max white, which is very useful and portable across sane color spaces. Granted, it’s not perfect, because RGB by nature doesn’t have a luminance component. A conversion is happening to plot RGB video on a waveform, but it’s plenty good enough. If we ever get another color space where black is something other than 0 mV (0 IRE), the graticules would land in different places, and that would get annoying. But for now, the current waveform is a good “percentage brightness” meter that’s accurate across all supported color spaces. We actually need “percentage brightness” more than we need literal IRE or absolute Y values when it comes to color matching clips to each other, especially if clips are from different color spaces or bit depths.
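As an example of the kind of conversion involved when plotting RGB, the sketch below assumes BT.709 luma weights are applied to full-range RGB before mapping to IRE. Whether Shotcut uses exactly these weights is an assumption, and the function name is hypothetical.

```python
# Hypothetical: weight full-range RGB with BT.709 luma coefficients,
# then express the result as a percentage of white (RGB 255).
def rgb_to_ire(r: int, g: int, b: int) -> float:
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b  # 0-255 for full-range RGB
    return luma / 255 * 100


if __name__ == "__main__":
    for rgb in [(0, 0, 0), (255, 255, 255), (255, 0, 0), (0, 255, 0), (0, 0, 255)]:
        print(rgb, f"{rgb_to_ire(*rgb):.2f} IRE")
```

Each saturated primary is a maximal RGB value, yet they land far apart on the IRE scale, which is the sense in which RGB has no luminance of its own until a weighting is chosen.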

So, to say that BT.709 Y 235 = 100 IRE is only half the information. Is it limited or full range YCbCr? BT.709 specifies both. Therefore, color picking RGB 255 off the screen could translate to Y 255 in full range or Y 235 in limited range. Both are valid conversions. Which one is actually in the source video? Having a conversion in the chain (plus ambiguity) is precisely why color picking display-converted RGB values is prone to errors and says nothing about the true YCbCr stream underneath.

For a scary example, consider a limited-range YCbCr file that has an overshoot of Y 255 in it. Since Y 235 is max white in limited range and 255 was specified, the converted color will be a superwhite above 100 IRE. But a superwhite can’t be represented on an sRGB display, because it tops out at RGB 255. Expanding Y 255 out of limited range gives (255 − 16) × 255 / 219 ≈ 278, and there is no RGB 278 option. Therefore, due to clipping, a color picker will only see RGB 255 at most, and will not realize that the underlying YCbCr stream had a superwhite. This is especially bad news for broadcast legalizer compliance checks, because superwhites would pass through unnoticed.
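The superwhite clipping problem can be reproduced with a few lines of Python. This sketch assumes a gray pixel (Cb = Cr = 128), so only Y matters, and models the display as clipping to 0-255. The function name is hypothetical.

```python
def limited_gray_to_display(y: int):
    """Expand limited-range gray Y to full range; return (unclipped, shown)."""
    expanded = (y - 16) * 255 / 219
    shown = max(0, min(255, round(expanded)))  # an 8-bit display clips here
    return expanded, shown


if __name__ == "__main__":
    for y in (235, 255):
        expanded, shown = limited_gray_to_display(y)
        ire = (y - 16) / 219 * 100
        print(f"Y {y}: {ire:6.2f} IRE, expands to {expanded:6.2f}, display shows RGB {shown}")
```

Both codes come back as RGB 255, so a color picker on the display cannot tell legal white from a roughly 109 IRE superwhite.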

Conclusion

The Shotcut video zoom and waveform scopes are working as they should, and their current implementation is perfect for color matching clips from different color spaces and ranges. There is nothing to modify unless support is extended to color spaces with non-0mV black points, and then a percentage meter might become more useful.

Sources

[1] IRE definition

https://en.wikipedia.org/wiki/IRE_(unit)

[2] VGA voltage

http://microvga.com/faq/electrical/what-are-vga-voltage-levels

[3] ITU-R BT.709 official spec

https://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT.709-6-201506-I!!PDF-E.pdf

[4] ICC interpretation of sRGB

http://www.color.org/sRGB.pdf

[5] sRGB official spec (IEC 61966-2-1)

https://webstore.iec.ch/publication/6168