I plan on shooting a video with two viewpoints, captured by different cameras: 1) a smartphone (4k with VFR, nominally around 30fps) and 2) a DSC (Full HD, fps fixed, selectable: 24/25/30/50/60).

I want to switch between viewpoints (4k for total and HD for closeups) in the project.

It’s a dancing tutorial, so video and audio need to stay in sync as much as possible.

I understand that the VFR footage should be converted to edit-friendly before editing. By default the conversion dialog suggests a framerate of 30, fps. My approach would be to override this in the conversion with 30,0000 fps, which should cause the least trouble when mixing with the 30fps HD footage.

I hope/assume that audio should stay in sync no matter what fps I choose in conversion.

I wouldn’t care about possible glitches in the original audio, since I can overdub the music title as a separate audio track anyway. What matters most is that switches between cameras stay in sync with the audio.

I’d appreciate your comments on whether this setup will serve the purpose.

Any other suggestions or caveats?

Thanks.

Audio recording and video recording are separate, so video properties like frame rate do not affect the audio in any way. Audio is recorded with a sample rate and a bit depth; the most common values are 44.1 kHz or 48 kHz and 16 or 24 bits.

In video, the frame rate is how many pictures are shown per second, but a second is always a second, whether at 1 fps or 1000 fps. Where the frame rate matters is when there is movement between pictures: if it is too low, our eyes will detect that the movement is not continuous. The reason to record at a higher frame rate is that the footage can be slowed down (slow motion) and still have enough pictures per second to trick our eyes into seeing smooth motion.

Changing the speed of a clip also changes the speed of its audio, and it will sound very strange. For minor speed changes this can be improved with pitch correction, but it will not sound like the original audio.
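To put a number on how much a speed change alters the sound, here is a minimal sketch using the standard relation for audio that is simply played faster or slower without pitch correction: the pitch moves by 12 × log2(speed) semitones. The function name is just for illustration.

```python
import math

def pitch_shift_semitones(speed: float) -> float:
    """Pitch change, in semitones, of audio played at `speed` times
    its original rate with no pitch correction applied."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return 12 * math.log2(speed)

# Doubling the speed raises the pitch by one octave (12 semitones).
print(pitch_shift_semitones(2.0))
# A 10 % speed-up is already well over a semitone.
print(round(pitch_shift_semitones(1.1), 2))
```

This only covers plain resampling; what a pitch-corrected speed change sounds like depends on the editor's algorithm.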

When using multiple cameras, you normally only need to record the audio once; there is no need to record audio on multiple devices.

Only if you record audio on a separate recorder can it be good to record audio in the camera as well, just to make it easier to align the audio from the recorder with the video from the camera.

In many cases you don’t need to record audio at all, because you add music, SFX, and voice-over in editing.

But if you are recording a live event like a concert, you need to record the audio in the best quality possible, because we are much more sensitive to bad audio than to bad video quality.

When using multicam, it is a good idea to have a visual cue, like clapping your hands in front of the cameras, so you can align the footage from the cameras before you start cutting and keep everything in time.
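As a rough illustration of the clap trick, the sketch below turns the clap position in each clip into a timeline offset. The function name and the example times are made up; in practice you would read the clap times off each clip in the editor.

```python
from fractions import Fraction

def clap_offset_frames(clap_in_a_s: float, clap_in_b_s: float,
                       timeline_fps: Fraction) -> int:
    """Number of timeline frames clip B must start later than clip A
    (negative: earlier) so that the clap lands on the same frame."""
    offset_seconds = clap_in_a_s - clap_in_b_s
    return round(offset_seconds * timeline_fps)

# Hypothetical example: the clap is 4.5 s into camera A's clip
# and 2.0 s into camera B's clip, on a 30 fps timeline.
print(clap_offset_frames(4.5, 2.0, Fraction(30)))
```

With those example values, clip B has to start 75 frames (2.5 s) after clip A. The result is rounded to whole frames, so the alignment can be off by up to half a frame.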

Thanks @TimLau for these considerations. This goes along with what I thought.

The footage from the full HD camera is most likely 29.97fps rather than an even 30. I mention this in case you need to convert it to edit-friendly as well. Most NTSC-compliant cameras use the fractional frame rates. Newer ATSC equipment can use integer frame rates, but a full HD camera probably predates those standards.

Having said that, the iPhone attempts true 30fps (although VFR does whatever it wants), so I would recommend converting it to 30fps as you planned. Mixing the two frame rates on the timeline is fine. Shotcut will drop or duplicate frames as necessary to make them align.
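To get a feel for how small the mismatch is, here is a back-of-the-envelope sketch. It assumes the usual exact value of 30000/1001 for "29.97" and a simple conform that drops or repeats whole frames; this is not necessarily how Shotcut handles it internally.

```python
from fractions import Fraction

NTSC_30 = Fraction(30000, 1001)  # the exact rate usually written as 29.97 fps

def seconds_per_adjusted_frame(source_fps: Fraction,
                               timeline_fps: Fraction) -> Fraction:
    """Average time between dropped (or duplicated) frames when a clip
    at source_fps is conformed to timeline_fps frame by frame."""
    surplus = abs(source_fps - timeline_fps)  # frames too many/few per second
    if surplus == 0:
        raise ValueError("rates match; no frames need adjusting")
    return 1 / surplus

interval = seconds_per_adjusted_frame(Fraction(30), NTSC_30)
print(interval, float(interval))
```

Under these assumptions a true 30 fps clip on a 29.97 fps timeline loses one frame roughly every 33 seconds, while the clip's duration, and with it the audio sync, stays the same.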

I’m going to throw this out there just as another option: you can probably get away with not converting at all and just editing and exporting as-is. 30, 29.97 and variable ~29.97 fps are close enough that the difference won’t be noticeable (compared to mixing 25, 30 and 50 fps). As for variable-framerate smartphone video: phones usually film quite well nowadays*, well enough that it’s not worth the conversion time with the best frame creation method (for me at least).

*I mention nowadays because with my very old Galaxy S2 I very often saw the framerate drop towards 5fps (because smartphone cameras were abysmal 10 years ago…). Actually I can mediainfo some right now:

Just some casual 7.3 to 125 fps?! (although I think this is a bug or some very wrong timing)

You are right - I only looked at the video setup in the camera (available framerates), but looking at existing videos, Mediainfo reports indeed 29,97 fps (it’s a Fuji X30). Since framerate is fixed I never got prompted to “convert to edit-friendly”.

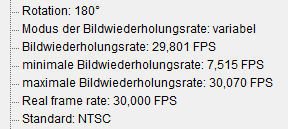

Checking the 4k videos shot with my 2020 Google Pixel4a, I frequently see framerates varying by only about 1 frame around 30fps, but I also spotted some with a minimum of less than 10fps (just checking a few):

What might be the meaning of the “real frame rate”?

Apparently it’s the intended frame rate of the recorder: its target was 30.000, but due to processing power or other hardware decisions it only got to 29.801.

There’s also some usage in slow motion/timelapse capturing (from what I’ve googled) where this would be very different from the captured frame rate.
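For anyone curious how minimum, maximum and average frame rates can differ so much within one VFR clip, here is a small sketch that derives them from per-frame timestamps. The function and the timestamps are made up for illustration, and this is not claimed to be how MediaInfo computes its figures.

```python
def frame_rate_stats(timestamps_s):
    """Average, minimum and maximum frame rate from a list of frame
    presentation times in seconds (must be strictly increasing)."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two frames")
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    if min(intervals) <= 0:
        raise ValueError("timestamps must be strictly increasing")
    duration = timestamps_s[-1] - timestamps_s[0]
    return {
        "average_fps": (len(timestamps_s) - 1) / duration,
        "min_fps": 1 / max(intervals),  # longest gap between two frames
        "max_fps": 1 / min(intervals),  # shortest gap between two frames
    }

# Hypothetical timestamps: one long gap, then two short ones.
print(frame_rate_stats([0.0, 0.5, 0.75, 1.0]))
```

A single late frame is enough to pull the minimum far down while barely moving the average, which would fit a clip that averages close to 30fps but reports a minimum below 10fps.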
