AMD good. Intel bad. LOL!

Thanks for the tip, @Austin! If I invest in big disks so I can actually use lossless intermediate files, now I know they need to be blazingly fast big drives.

Sidenote - My Setup:

HP Compaq 6305 Pro Small Form Factor Desktop,

AMD Quad-Core A8-5500, 3.2 GHz,

8GB DDR3,

480 GB SSD,

GeForce GT 710,

Kubuntu Linux 18.04

Cameras (at the time this video was recorded):

Nokia Lumia 920 cellphone,

Nokia Lumia 830 cellphone,

Cheap Chinese GoPro clone from the Walmart clearance table,

Panasonic HDC-SD80

The experiment here is taken from a remastering session. I am producing an edit-friendly intermediate file with the best audio (from the Panasonic) overlaid on the GoPro clone's video, giving one of the four simultaneous views used for final editing.

Sidenote - My Process:

(1) Shoot three or four simultaneous videos with tripod-mounted cameras.

(2) Produce the same number of time-synchronized intermediate MP4 files of equal length, with audio sync problems corrected, flesh tones color-matched, camera-angle problems corrected, etc.

(3) Final edit from the intermediate files.
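Step (2) includes correcting audio sync between cameras. One common way to find the offset between two cameras' recordings of the same event is to cross-correlate their audio. The sketch below is a brute-force illustration of that general technique, not the poster's actual workflow. It assumes both tracks are already decoded to mono sample lists at the same sample rate, and `best_lag` is a hypothetical helper.

```python
def best_lag(reference: list[float], other: list[float], max_lag: int) -> int:
    """Brute-force cross-correlation (hypothetical helper).

    Returns the lag, in samples, for which other[i + lag] best matches
    reference[i]. A positive lag means the event happens later in `other`,
    so trimming that many samples from the start of `other` lines it up.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):  # only count overlapping samples
                score += r * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy example: the same clap-like click arrives 2 samples later in `other`.
reference = [0.0, 0.0, 1.0, 0.0, -1.0, 0.0, 0.0, 0.0]
other = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, -1.0, 0.0]
print(best_lag(reference, other, max_lag=3))  # prints 2
```

Divide the lag by the sample rate to get seconds. This nested loop is far too slow for full-length 48 kHz tracks; real tools typically use an FFT-based correlation on a short excerpt, but the idea is the same.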

Before proxies, this was the only way to avoid the horrendously slow rendering during editing with all filters in place. I had tried using the semi-automatic edit-friendly file conversion in Shotcut when it was introduced; I was not satisfied with the results, so I began to do my own.

Unfortunately, there is a ton of nuance to this. There is a difference between the number of threads used to generate a frame (for filters and compositing), versus the number of threads used to encode a frame (by libx264). If encoding with H.264/HEVC, the encoder will saturate any remaining CPU and make it look like it’s being used well. But Shotcut itself may only be using a single core to generate the frame.

The easiest way to verify this is to export with a codec that puts near-zero load on the CPU, like a hardware encoder or libx264 with preset=veryfast. Then we can see the cores and threads used to generate a frame.
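On Linux (the setup above runs Kubuntu), a desktop system monitor shows per-core load, but the same numbers can be read directly from `/proc/stat`. This is a hedged sketch, not a Shotcut feature: it compares two snapshots of the per-core counters taken while an export runs. The field order follows the Linux `proc(5)` documentation; the helper names `parse_proc_stat`, `utilization`, and `sample` are hypothetical.

```python
import time


def parse_proc_stat(text: str) -> dict[str, tuple[int, int]]:
    """Return {core: (busy_jiffies, total_jiffies)} for per-core lines such as 'cpu0 ...'."""
    cores = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip the aggregate 'cpu' line and non-CPU lines such as 'intr' or 'ctxt'.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        values = [int(v) for v in fields[1:]]
        # Order: user nice system idle iowait irq softirq steal guest guest_nice.
        # guest/guest_nice are already counted in user/nice, so sum only the first 8.
        idle = values[3] + (values[4] if len(values) > 4 else 0)
        total = sum(values[:8])
        cores[fields[0]] = (total - idle, total)
    return cores


def utilization(before: str, after: str) -> dict[str, float]:
    """Percent of time each core was busy between two /proc/stat snapshots."""
    first, second = parse_proc_stat(before), parse_proc_stat(after)
    result = {}
    for core, (busy1, total1) in first.items():
        busy2, total2 = second[core]
        elapsed = total2 - total1
        result[core] = 100.0 * (busy2 - busy1) / elapsed if elapsed else 0.0
    return result


def sample(interval: float = 2.0) -> dict[str, float]:
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = f.read()
    return utilization(before, after)
```

Call `sample()` while an export with a near-zero-load encoder is running. If one core sits near 100% while the others stay mostly idle, frame generation is probably the single-threaded part.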

The other nuance is which filters are used. Some thread very well. Some, like “Reduce Noise: Wavelet”, only use a single thread (but it’s awesome at its job, so I use it anyway). Using a zero-load encoder is the main way to tell what Shotcut is really using, as opposed to what the encoder is using.

Since you noticed your CPU usage at 70% instead of 100%, that tells me the encoder is waiting for a frame to be generated and is sitting idle.

This used to be true. GPU processing was disabled many versions ago due to instability. It can be manually turned on, but few people do it, and it is not recommended. Those were probably old discussion topics you found.

Whatever makes you happy lol.

For real-time editing, this would be true. If you edit with proxies, the proxies need little disk bandwidth, so affordable giant magnetic drives have no speed issue. I still use large magnetic HDDs since I use proxies. Proxies are amazing.
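A rough upper bound shows why lossless intermediates push disks much harder than proxies. This sketch computes the uncompressed data rate of 1080p30 video; the pixel formats and frame rate are assumptions chosen for illustration, not settings from this thread. Lossless codecs such as Ut Video or Huffyuv compress below these figures by a content-dependent amount, so treat them as worst cases to compare against a drive's sustained read speed.

```python
def raw_video_mb_per_s(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video data rate in megabytes (10**6 bytes) per second."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Average bits per pixel for common packed pixel formats (assumed examples).
FORMATS = {"8-bit 4:2:0": 12, "8-bit 4:2:2": 16, "10-bit 4:2:2": 20}

for name, bpp in FORMATS.items():
    one_stream = raw_video_mb_per_s(1920, 1080, 30, bpp)
    # Times four, assuming a four-camera edit reads every intermediate at once.
    print(f"{name}: {one_stream:.1f} MB/s per stream, {4 * one_stream:.1f} MB/s for four")
```

For 8-bit 4:2:0 this works out to about 93 MB/s per stream and about 373 MB/s for four streams read together.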

Interesting! Does this mean a multi-cam setup of one event, or shooting several events back-to-back?

“manually turned on” - how?

I want to play!

Three tripods, up to five cameras (a piggy-back and a double-mount bar).

For example:

Thank you.

As I said, I am learning this week.

I will experiment.

(This means turning off my browser for a little while. Be back shortly.)

Oops, I forgot to clarify why the core usage pattern was significant.

If somebody is using libx264 and it saturates the CPU, then they’re exporting the fastest their computer can do and there is nothing further to optimize.

But if someone is using a codec that doesn’t require a lot of CPU, like DNxHR or Ut Video, then the entire CPU bottleneck falls on how fast Shotcut can generate a frame (filters and compositing). This is why some people see 16 threads used (they exported with libx264) while other people see only 4 threads used. They may have used Huffyuv, which has near-zero CPU overhead, and the bottleneck was frame generation due to a single-threaded filter.
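The reasoning above can be expressed as a toy two-stage pipeline: frame generation feeds the encoder through a buffer, so throughput is set by whichever stage is slower, and the faster stage sits partly idle. This is a simplified model for illustration only (it ignores the two stages competing for the same CPU cores), and the per-frame timings in the example are hypothetical, not measurements from this thread.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    seconds_per_frame: float  # wall-clock time this stage spends on one frame


def pipeline_report(generate: Stage, encode: Stage) -> dict:
    """Steady-state throughput of two stages connected by a frame buffer.

    Toy model: each stage runs on its own resources, so the slower stage
    sets the frame rate and the faster one waits part of the time.
    """
    slowest = max(generate, encode, key=lambda s: s.seconds_per_frame)
    fps = 1.0 / slowest.seconds_per_frame
    # Fraction of each frame interval during which a stage is actually working.
    busy = {s.name: s.seconds_per_frame * fps for s in (generate, encode)}
    return {"fps": fps, "bottleneck": slowest.name, "busy_fraction": busy}


# Hypothetical timings: a single-threaded filter needs 0.1 s per frame.
print(pipeline_report(Stage("generate", 0.1), Stage("encode", 0.005)))  # near-zero-load codec
print(pipeline_report(Stage("generate", 0.1), Stage("encode", 0.2)))    # CPU-heavy encoder
```

In the first case the encoder is busy only 5% of the time, which is the "encoder waiting for a frame" situation; in the second, frame generation idles half the time and the encoder sets the pace.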

You found good tips! My main reason for responding to threads like this is to help people understand what hardware makes the biggest difference so their gear budgets are spent wisely. The worst thing is being disappointed about a performance boost someone hoped to see but didn’t get after spending a bunch of money (in the wrong place).

Absolutely!

More experiments.

(More screenshots are being uploaded to the Dropbox file mentioned above.)

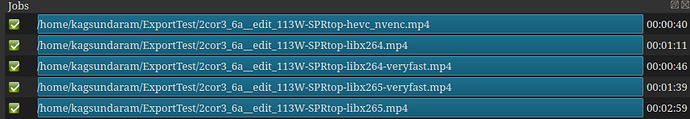

All with Hardware Encoder UNchecked.

hevc_nvenc runs all four threads at 75% (about the same as with Hardware Encoder checked).

All of the libx264 and libx265 exports run all four cores at 100%, or nearly so.

hevc_nvenc is still the fastest. I wonder why it performs the same with or without hardware encoding turned on.

This is the hardware encoder. If the checkbox is checked, it recommends this option. If it is unchecked but the user manually picks this option, hardware still gets used. That’s why results are identical.

Since this is a hardware encoder, the CPU is free of encoding and used basically exclusively by Shotcut for frame generation now. The fact that it isn’t 100% CPU usage means a filter or something is not threading well. If you had a 24-core box, it probably wouldn’t do much better because the additional cores would likely sit idle. They aren’t all being fully used even at 4 cores.
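Amdahl's law is a standard way to quantify the point about a 24-core box. It is not something the posters cited, and the 50% parallel fraction below is a hypothetical number: if half of each frame's work is stuck on one thread, going from 4 to 24 cores raises the ideal speedup only from 1.6x to 1.92x.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only `parallel_fraction` of the work scales with cores."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical: half of the per-frame work is a single-threaded filter.
for n in (4, 8, 16, 24):
    print(f"{n:2d} cores: {amdahl_speedup(0.5, n):.2f}x")
```

The serial fraction caps the speedup at 1 / (1 - p) no matter how many cores are added, which is why extra cores would likely sit idle.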

Thought so.

Thanks.

Oh dear.

It looks like I need to put my software engineer hat back on, learn yet another genre, and fix the multi-threading in the filters I need for everyday use.

Well, I have plenty of time; it will be quite a while before I can afford to upgrade to Threadripper.

I have found the answer to my question.

“No”

The NVIDIA X Server Settings screen never shows more than 10% PCIe Bandwidth Utilization during the bottlenecked (75% CPU usage) periods.

PCIe bandwidth is NOT the culprit.

Percentages here can be confusing. I was seeing 20% “Video Engine Utilization”, which I believe is the same measure (I cannot find any documentation to confirm or refute this assumption), but I think we are now both seeing the same thing.

Using 40 CUDA cores to encode would be about 5% of a GTX 1650, but over 20% of my dinky little GT 710.
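The arithmetic behind those percentages, assuming NVIDIA's published CUDA core counts (896 for the GeForce GTX 1650, 192 for the GeForce GT 710) and the illustrative 40 cores from the post: 40/896 ≈ 4.5% and 40/192 ≈ 20.8%. This only shows the ratio; it says nothing about how NVIDIA's utilization readouts are actually calculated.

```python
# CUDA core counts from NVIDIA's published specifications.
CUDA_CORES = {"GeForce GTX 1650": 896, "GeForce GT 710": 192}


def percent_of_cores(cores_used: int, total_cores: int) -> float:
    """Share of a GPU's CUDA cores, in percent, taken by `cores_used`."""
    return 100.0 * cores_used / total_cores


for card, total in CUDA_CORES.items():
    # 40 cores is the illustrative figure from the post, not a measured value.
    print(f"{card}: {percent_of_cores(40, total):.1f}%")
```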

Good point on percentages, sorry. I was referring to recent cards.

CUDA is mainly used to calculate B-frames. If B-frames are turned off, then CUDA doesn’t get used at all. Encoding would happen inside the separate and dedicated NVENC circuitry.

The Shotcut user interface is also using GPU to display preview video and draw some UI controls. It uses OpenGL or DirectX surfaces. Any other running programs doing a similar thing would also register GPU usage. So it’s difficult to tell what amount is actually going to encoding.