It’s always great to see you make one of your thoughtful posts, @Austin.

Yeah, it's cool as long as Shotcut doesn't use that as its main way of avoiding lag, because that's basically the Premiere Pro method. Premiere Pro is still very CPU-heavy, and its select-an-area-to-render feature is how it deals with lag without involving the GPU. That was fine back then, but nowadays it isn't talked about so positively compared with Final Cut and Resolve, where playback is consistently smooth. This feature would be very cool as an option, especially for people using low-spec computers, but I would hate to see Shotcut rely on it the way Premiere Pro does, because that's no longer the standard users expect.

I was thinking about KKnBB's suggestion again and got an idea. Granted, I'm not a programmer at all, so I don't know whether this is even possible or just fantasy, but here it is. Instead of caching or rendering arbitrary chunks of the timeline based on wherever the playhead happened to be, could a system be programmed to prioritize what it caches based on what is happening in each part of the timeline? For example, if a transition is created, it gets cached right away. If a section of the timeline has layered videos, images, text, etc., it gets cached right away. If a section has effects like Gaussian Blur combined with some distortion effects, it gets cached right away. Since those are the areas where lag is a real issue, they would be rendered with higher priority. If that could be achieved, it would also save disk space, since Shotcut currently has no trouble with lag when you are just playing a single video.
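To make the idea a bit more concrete, here's a rough Python sketch of "cache the expensive spans first." Everything in it is hypothetical: the `Segment` fields, the weights, and the scoring rule are my own assumptions for illustration, not anything from Shotcut or MLT. Each span gets a lag-risk score from its transitions, stacked layers, and heavy filters; spans scoring zero (a single clip with no effects) are skipped entirely, and the rest come out of a priority queue most-expensive-first.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Segment:
    """A hypothetical timeline span; fields are illustrative, not Shotcut's data model."""
    start: float                  # seconds
    end: float                    # seconds
    has_transition: bool = False
    layer_count: int = 1          # stacked video/image/text tracks over this span
    heavy_filters: list = field(default_factory=list)  # e.g. ["Gaussian Blur"]


# Assumed weights: a guess at how much each kind of work costs during playback.
TRANSITION_WEIGHT = 3
EXTRA_LAYER_WEIGHT = 2
HEAVY_FILTER_WEIGHT = 2


def cost_score(seg: Segment) -> int:
    """Estimate how likely a span is to lag; 0 means plain single-clip playback."""
    score = 0
    if seg.has_transition:
        score += TRANSITION_WEIGHT
    score += EXTRA_LAYER_WEIGHT * max(0, seg.layer_count - 1)
    score += HEAVY_FILTER_WEIGHT * len(seg.heavy_filters)
    return score


def cache_queue(segments):
    """Return the spans worth caching, most expensive first; plain spans are skipped."""
    heap = []
    for index, seg in enumerate(segments):
        score = cost_score(seg)
        if score > 0:  # plain playback is already smooth, so don't spend disk on it
            # heapq is a min-heap, so push the negated score; the index breaks ties
            # in timeline order and keeps Segment objects from ever being compared.
            heapq.heappush(heap, (-score, index, seg))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


timeline = [
    Segment(0, 10),                                               # plain clip
    Segment(10, 12, has_transition=True),                         # a transition
    Segment(12, 20, layer_count=3),                               # layered video/text
    Segment(20, 30, heavy_filters=["Gaussian Blur", "Distort"]),  # heavy effects
]
for seg in cache_queue(timeline):
    print(seg.start, seg.end, cost_score(seg))
```

A real editor would presumably re-run something like `cache_queue` whenever the timeline changes and let a background worker render from the front of the list, but that part is left out here.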

I imagine my suggestion, if it's even possible, would take a tremendous amount of coding, so I don't want to downplay the work it would take to bring anything like that to fruition. But if a cache system were implemented, it would be amazing if it were a smart one.

According to this page, FCP X: Render Files, Exporting and Image Quality | Larry Jordan, the default render format is ProRes 422, with an option to change to other ProRes formats, including ProRes 422 HQ.

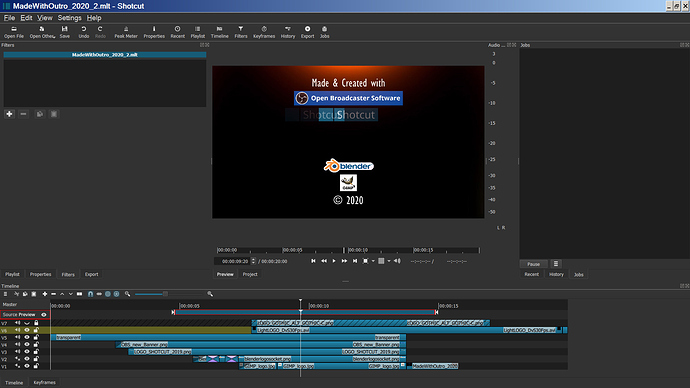

That page includes some screenshots of Final Cut's options. It seems Final Cut puts the cache settings in the same place where you first set the project's video mode, name, etc. So if a cache system were implemented in Shotcut, it wouldn't be a bad idea to add those settings to Shotcut's current start screen alongside the project name and video mode.

On the same page I linked above, the author describes how Final Cut handles render files versus export settings:

HOW FINAL CUT PRO X HANDLES RENDER FILES

When the time comes to share (export) a file, there are three ways Final Cut will handle render files:

1. If the render files exactly match your export destination codec (for example, a project with ProRes 422 HQ render files exporting to a ProRes 422 HQ master file), then the render files will be used. That is, the frames are simply copied to the final file.

2. If your export destination codec is one of the 6 ProRes codecs, or one of the two uncompressed 4:2:2 codecs, and the render files don’t match, Final Cut treats the timeline as if it was unrendered. In other words, it goes back to the original/optimized/proxy files rather than use your render files. This is similar to the way Export Movie worked in FCP 7.

3. If the final delivery codec is of a lower quality than the render files, then Final Cut transcodes directly from the render files during share. This preserves original quality (one of the main purposes of ProRes) and insures that the final output is finished as quickly as possible.

I really like how Apple’s engineers have solved this problem. It means that if my render files don’t match my final output, Apple will use the highest quality when creating a master file or the fastest option when compressing a file for the web.
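Read as decision logic, the three rules from the article boil down to something like the Python sketch below. The codec name strings, the enum, and the assumption that any codec outside the ProRes/uncompressed set counts as a "lower quality" delivery codec are my own simplifications for illustration, not how Final Cut is actually implemented.

```python
from enum import Enum

# The six ProRes flavors plus the two uncompressed 4:2:2 codecs the article mentions.
# The exact strings are assumptions made for this sketch.
MASTER_CODECS = {
    "ProRes 4444 XQ", "ProRes 4444", "ProRes 422 HQ",
    "ProRes 422", "ProRes 422 LT", "ProRes 422 Proxy",
    "Uncompressed 8-bit 4:2:2", "Uncompressed 10-bit 4:2:2",
}


class ExportSource(Enum):
    COPY_RENDER_FILES = "copy the rendered frames straight into the output"
    RERENDER_FROM_MEDIA = "ignore render files; go back to original/optimized/proxy media"
    TRANSCODE_FROM_RENDER_FILES = "transcode from the render files"


def choose_export_source(render_codec: str, export_codec: str) -> ExportSource:
    # Rule 1: the render files exactly match the export codec, so reuse them as-is.
    if render_codec == export_codec:
        return ExportSource.COPY_RENDER_FILES
    # Rule 2: a mastering codec that doesn't match: treat the timeline as unrendered.
    if export_codec in MASTER_CODECS:
        return ExportSource.RERENDER_FROM_MEDIA
    # Rule 3 (simplified): anything else is assumed to be a lower-quality delivery
    # codec, so the high-quality render files are a fast, good-enough source.
    return ExportSource.TRANSCODE_FROM_RENDER_FILES


print(choose_export_source("ProRes 422", "H.264").value)
```

A Shotcut version would presumably compare against whatever intermediate format its own cache used, but the shape of the decision would be the same.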

That sounds like a model to use as inspiration. He also wrote this earlier in his article:

Keep in mind that FCP X makes use of all the processing resources in the system simultaneously (GPU + CPU). In other words, Final Cut has the GPU doing effects and image processing, which offloads work from the CPU so it can focus on encoding. So with both GPU and CPU working together in parallel, Apple has made huge improvements in the export speed compared to FCP 7, or other applications that are not so tightly integrated into the Apple hardware.

That matches an answer I found on Quora when searching online about why Final Cut is so fast:

1. It is a 64 bit application, able to access huge memory spaces.

2. It is architected to use all available cores. If your CPU has 8 cores, FCPX will use them all - unless you are using one for something else.

3. It does background rendering, so you can continue to edit as it is rendering.

4. It is architected to take advantage of the GPU.

So Final Cut uses all three resources in concert: every core of the CPU, the GPU, and caching/background rendering. Shotcut should aim for that.

I personally use an external hard drive with several terabytes of space as a scratch disk, so the ability to choose where cache files are stored would be great: I would point it at that drive and the cache wouldn't bother me. However, for those who don't have that option, maybe letting the user manually set what to cache on the timeline and when (à la Premiere Pro) could be an alternative?

Also, the whole cache system should be optional, not the foundation of the video editor. Using all CPU cores along with the GPU should be the foundation. So if a user simply doesn't have the space for caching/background rendering but has plenty of CPU and GPU power, they could turn background rendering off and rely on the CPU and GPU. If neither of those is an option, that's what the proxy workflow and preview scaling are there for.

Yeah, this is why I say background rendering should be an option, not the basis. If it causes an issue like that, it would be a waste of time for a user with sufficient CPU and GPU power to wait for caching. If the CPU and GPU can't keep up either, they could just use a proxy workflow and set the proxies to a low resolution so they are generated quickly.
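Roughly, the fallback order I'm describing (CPU + GPU as the baseline, background caching as an opt-in extra, proxies/preview scaling as the last resort) could look like this hypothetical Python sketch. The `Machine` fields and the 20 GB threshold are made-up assumptions for illustration, not settings Shotcut actually has.

```python
from dataclasses import dataclass

BASELINE = "multi-core CPU + GPU rendering"
CACHE = "background render cache"
PROXY = "proxy media / preview scaling"

# Hypothetical minimum free space before background caching is worth enabling.
MIN_CACHE_GB = 20.0


@dataclass
class Machine:
    """Hypothetical summary of a user's setup; how to measure these is out of scope."""
    realtime_cpu_gpu: bool           # can all CPU cores + GPU play the timeline smoothly?
    free_cache_gb: float             # free space on the user-chosen cache/scratch disk
    background_render_enabled: bool  # the user's opt-in setting


def playback_plan(m: Machine) -> list:
    """CPU + GPU is always the base; extras are added only when they make sense."""
    plan = [BASELINE]
    use_cache = m.background_render_enabled and m.free_cache_gb >= MIN_CACHE_GB
    if use_cache:
        plan.append(CACHE)
    elif not m.realtime_cpu_gpu:
        # No cache available and the hardware can't keep up: fall back to lighter media.
        plan.append(PROXY)
    return plan


print(playback_plan(Machine(realtime_cpu_gpu=True, free_cache_gb=0, background_render_enabled=False)))
print(playback_plan(Machine(realtime_cpu_gpu=False, free_cache_gb=0, background_render_enabled=False)))
```

The point of the ordering is that nobody with a capable machine is forced to wait on a cache, while the cache and proxies remain available to those who need them.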

I found another short article on Final Cut’s background rendering: https://anawesomeguide.com/2017/09/26/fcp-x-background-render/

It's basic stuff, but since I've never used Final Cut, I found it an interesting read, especially the screenshots of its background rendering options. Others might find it useful too.