TL;DR… There is nothing to worry about. Shotcut and the other lossless codecs have you totally covered.

Now for the details…

I wish I had known this about you from the start. It provides context for everything.

In a VFX pipeline, it is very common to work entirely in RGB/A. For motion pictures and other large productions, it’s even more common for that RGB space to be linear and to be exchanged as EXR or DPX image sequences rather than video files. As you’re probably aware, this is very specific to the VFX workflow. Before and after the VFX integration, it’s a completely different world when it comes to encoding video: out there, the only common formats are YUV or some variant of RAW (like CinemaDNG or BRAW). RGB (as in sRGB, to distinguish it from linear or RAW) rarely exists outside of 3D animation or motion graphics renderings, or screen recordings of video games captured by OBS Studio. Basically, RGB is for material generated by a computer, as opposed to video captured with a camera.
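To make the linear vs sRGB distinction concrete, here is a minimal Python sketch of the standard sRGB transfer curve (the piecewise function from IEC 61966-2-1). The function names are my own for illustration; this isn’t lifted from any particular VFX tool.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92  # linear toe near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Encode one linear-light channel value in [0, 1] with the sRGB curve."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055


if __name__ == "__main__":
    mid_code = 128 / 255  # 8-bit code value 128, normalized
    print(f"sRGB code 128 is {srgb_to_linear(mid_code):.3f} in linear light")
```

Code value 128 works out to roughly 22% linear light, not 50%, which is why linear RGB and sRGB-encoded RGB can’t be mixed without a conversion even though both are “RGB”.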

Before answering your other questions, we should first double-check your source video. The pixel format was gbrp, meaning it’s an RGB video. But how did it get to be RGB? Was it generated by a computer, like a rendering or screen capture? Or was it converted to RGB from video captured with a camera? This matters because you wanted absolutely zero loss due to compression. If the source video was YUV from a camera and was then converted to RGB for Lagarith, loss happened right there in the YUV-to-RGB conversion. Granted, the loss won’t be terribly noticeable, and it is probably minimal, since the camera already converted its RGB Bayer sensor data to YUV in the first place, meaning the camera chose YUV values that map most closely back to the original RGB. However, if the RGB conversion was done later with software that isn’t perfectly color correct, there will most definitely be loss in every YUV-to-RGB conversion and back. There is also the issue that YUV can represent colors outside the RGB gamut, so RGB will lose that color data if transcoded from YUV. That can turn into noticeable banding and possible generation loss if you apply heavy color grading.
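To put rough numbers on both points (round-trip loss and out-of-gamut YUV), here is a minimal Python sketch. It assumes 8-bit limited-range BT.709 YCbCr (typical for HD camera footage), ignores chroma subsampling, and uses plain rounding. Real converters such as FFmpeg’s swscale may round or filter differently, so treat the counts as illustrative rather than as a measurement of any specific tool. The function names are hypothetical.

```python
# Assumptions: 8-bit, limited ("MPEG") range, BT.709 coefficients, no chroma
# subsampling, plain rounding. Real converters may behave differently.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def yuv_to_rgb8(y8: int, cb8: int, cr8: int) -> tuple[tuple[int, int, int], bool]:
    """Decode one YCbCr triplet to 8-bit RGB.

    Returns the rounded, clipped RGB triplet plus a flag that is True when a
    channel fell outside 0-255, i.e. the YCbCr value lies outside the RGB cube.
    """
    y = (y8 - 16) / 219
    cb = (cb8 - 128) / 224
    cr = (cr8 - 128) / 224
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    codes = [round(v * 255) for v in (r, g, b)]
    clipped = any(c < 0 or c > 255 for c in codes)
    r8, g8, b8 = (min(255, max(0, c)) for c in codes)
    return (r8, g8, b8), clipped


def rgb8_to_yuv(r8: int, g8: int, b8: int) -> tuple[int, int, int]:
    """Encode 8-bit RGB back to 8-bit limited-range YCbCr."""
    r, g, b = r8 / 255, g8 / 255, b8 / 255
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))
    cr = (r - y) / (2 * (1 - KR))
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)


def survey(step: int = 4) -> tuple[int, int, int]:
    """Walk a grid of legal YCbCr codes; count clipped and changed values."""
    total = clipped = changed = 0
    for y8 in range(16, 236, step):
        for cb8 in range(16, 241, step):
            for cr8 in range(16, 241, step):
                rgb, was_clipped = yuv_to_rgb8(y8, cb8, cr8)
                total += 1
                clipped += was_clipped
                if rgb8_to_yuv(*rgb) != (y8, cb8, cr8):
                    changed += 1
    return total, clipped, changed


if __name__ == "__main__":
    total, clipped, changed = survey()
    print(f"{total} YCbCr samples: {clipped} outside the RGB cube, "
          f"{changed} did not survive YUV -> RGB -> YUV")
```

The grid step of 4 just keeps the run fast; a step of 1 checks every legal code at the cost of a much longer run.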

This is why all the lossless codecs mentioned in this thread support both YUV and RGB variants: the output format must match the input format in order to avoid a conversion loss. You can do everything as RGB in Shotcut just as you’re doing in After Effects, but there is potential for loss even with RGB in both workflows, and it’s up to you to decide if you’re okay with that. Apparently you haven’t noticed a problem with After Effects, so in theory you should be fine doing the same workflow in Shotcut.
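The “output must match input” rule can be written as a tiny lookup. This Python sketch assumes the set of pixel formats FFmpeg’s Ut Video encoder accepts (gbrp, gbrap, yuv420p, yuv422p, yuv444p); confirm that list against `ffmpeg -h encoder=utvideo` on your own build. The function name is hypothetical, and the source pixel format is whatever your inspection tool reports (gbrp in your case).

```python
# Pixel formats assumed to be accepted by FFmpeg's Ut Video encoder.
# Verify with `ffmpeg -h encoder=utvideo`; builds can differ.
UTVIDEO_PIX_FMTS = {"gbrp", "gbrap", "yuv420p", "yuv422p", "yuv444p"}


def matching_output_pix_fmt(source_pix_fmt: str) -> str:
    """Return the Ut Video pixel format identical to the source's.

    Reusing the source format avoids an RGB<->YUV conversion (and any chroma
    resampling) on export. Raises ValueError when no exact match exists,
    because any other choice implies a conversion.
    """
    if source_pix_fmt in UTVIDEO_PIX_FMTS:
        return source_pix_fmt
    raise ValueError(
        f"{source_pix_fmt!r} has no exact Ut Video equivalent; "
        "exporting would require a conversion"
    )


if __name__ == "__main__":
    for fmt in ("gbrp", "yuv422p", "yuv422p10le"):
        try:
            print(fmt, "->", matching_output_pix_fmt(fmt))
        except ValueError as err:
            print(fmt, "->", err)
```

Raising an error instead of silently picking the “closest” format is deliberate: a silent fallback is exactly where an unnoticed conversion would sneak in.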

All that aside, let’s get down to editing…

Since your input is RGB, your life will indeed be much simpler than the average person using Shotcut who has to deal with YUV. Lucky you!

You’ll be relieved to know that when you drop an RGB video onto the timeline, the color range drop-down box for Limited vs Full has no effect. Those options are only relevant for YUV video. Since this rightfully concerns you, you can prove it for yourself: switch between the two options and notice that the preview window doesn’t look any different either way. Then drop a YUV video onto the timeline (a video from a cell phone will suffice) and switch between the two options. The colors in the preview window will shift radically. Limited vs Full is ignored for RGB video because the concept is meaningless for RGB. @shotcut, to @Lagarith’s point, it would be nice if Shotcut automatically set the color range drop-down box to Full when the source video is RGB. I’m not sure if that’s an option or even a good idea, but it sounds logical in theory.
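Why the YUV preview shifts so much can be seen with luma alone. This minimal Python sketch (hypothetical function name, 8-bit values, neutral chroma assumed so only luma matters) shows how the same stored code is displayed under each interpretation. An RGB pixel’s code values are used as-is, so there is no equivalent switch to flip.

```python
def luma_to_gray8(y8: int, full_range: bool) -> int:
    """Map an 8-bit luma code (with neutral chroma) to an 8-bit gray level.

    Limited ("MPEG") range puts black at 16 and white at 235, so it must be
    stretched to 0-255. Full ("JPEG") range already spans 0-255.
    """
    if full_range:
        return y8
    scaled = (y8 - 16) * 255 / 219
    return min(255, max(0, round(scaled)))  # clip codes outside 16-235


if __name__ == "__main__":
    for code in (16, 128, 235):
        print(code, luma_to_gray8(code, False), luma_to_gray8(code, True))
```

A stored 16 is pure black when read as Limited but a dark gray when read as Full, which is the “radical shift” you’ll see in the preview.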

At this point, editing can be done care-free just like you would normally expect. No need to double-check Shotcut’s interpretation of your files because RGB is RGB is RGB. Simplicity is a feature of RGB and has nothing to do with Lagarith.

Now we get to exporting. The easiest way to have Shotcut export as RGBA is to choose the Alpha > Ut Video option from the list of stock export presets. If you choose that preset and then go to the Advanced > Other tab, you’ll see these lines in it:

```
mlt_image_format=rgb24a
pix_fmt=gbrap
```

The first line tells Shotcut to use an internal RGBA processing pipeline. The second line says the output video format should also be RGBA (as opposed to converting to YUV). If you want RGB without an alpha channel, change those two lines to the following and optionally save it as a custom export preset if you’ll use it often:

```
mlt_image_format=rgb24
pix_fmt=gbrp
```

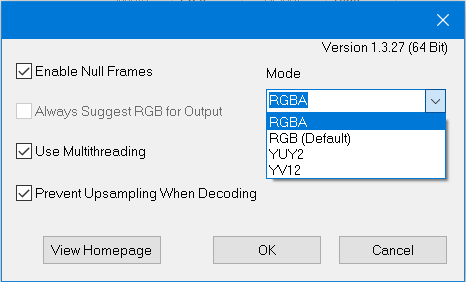

That’s all there is to exporting as RGB. The same concept works for all the lossless codecs, including Lagarith. Lagarith seemed simple because you were using it in RGB, not because of any magic in the codec itself. Lagarith also has YUV options (they were called YUY2 and YV12 in your screenshot) that are every bit as complicated as the YUV options of the other codecs. They’re all the same. Lagarith has no more common sense than any of the other codecs; it’s just that RGB is arguably more common-sense than YUV for everything except color gamut and disk space usage.

I demonstrated Ut Video as a possible replacement for Lagarith simply because it has an export preset already defined in a default installation of Shotcut. You could just as easily use HuffYUV or MagicYUV. When you’re in RGB, there’s no particular reason to favor one over another, except that Ut Video is the most actively maintained and continually optimized in the ffmpeg code base. And you’ll be happy to know that since RGB doesn’t require color metadata due to its simplicity, you can use an AVI container without any worries or caveats at all. You don’t have to add Matroska MKV files to your life with RGB.
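If you ever want the same RGB Ut Video export outside Shotcut (batch jobs, or checking results independently), the ffmpeg equivalent is easy to build. This is not what Shotcut runs internally (it goes through the MLT framework); it’s a standalone Python sketch. The function name is hypothetical, and the 16-bit PCM audio choice is an assumption you may want to change if your source audio is 24-bit.

```python
import shlex


def utvideo_rgb_command(src: str, dst: str, alpha: bool = False) -> list[str]:
    """Build an ffmpeg command that encodes Ut Video in RGB or RGBA.

    gbrp/gbrap are the same pix_fmt values used in Shotcut's
    Advanced > Other tab, so the video stays RGB end to end.
    """
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "utvideo",
        "-pix_fmt", "gbrap" if alpha else "gbrp",
        "-c:a", "pcm_s16le",  # assumption: uncompressed 16-bit audio
        dst,
    ]


if __name__ == "__main__":
    # Print the command instead of running it; pass the list to
    # subprocess.run(...) to actually encode.
    print(shlex.join(utvideo_rgb_command("render.avi", "render_utvideo.avi")))
```

Returning an argument list rather than one shell string avoids quoting problems with file names that contain spaces.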

The world of video encoding is dizzying, I get it. The glory of Shotcut is that it gracefully allows the importing of videos in any format, any bit depth, RGB or YUV, interlaced or not, any frame rate, drop frame or not, any color space, and any color range all on the same timeline within the same project, and gives you the power to tweak any settings that were not guessed correctly when provided with files that are malformed or incomplete. These formats that are so understandably ridiculous and full of compromises in the eyes of VFX guys are unfortunately the bread and butter of the broadcast industry and the distribution markets (like DVD and Blu-ray). There are numerous people in this forum who work in broadcast studios and use Shotcut for its incredibly forgiving and versatile treatment of any format thrown at it.

For a documentary filmmaker receiving video files sent in from all over the world, Shotcut is the coolest tool out there for being able to drop all videos immediately onto a single timeline. By comparison, if you wanted to edit video in Blender or Lightworks, all the source videos would have to be transcoded to a common frame rate and color space before they would play nicely on the timeline. Meanwhile, Shotcut can get to work directly on the original files and interpolate transparently. It’s a thing of beauty. It’s actually a little unfortunate that the documentation and the “marketing arm” of Shotcut do not emphasize these features more prominently. The abundance of options (including the nonsensical ones) is actually raw, unlimited power in the hands of people who need it.
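As a generic illustration of how a mixed-rate timeline can play each clip at its native speed, here is a Python sketch of the simplest approach: for each timeline frame, show whichever source frame covers that moment (frames get repeated or skipped, with no motion interpolation). Shotcut’s own logic in MLT may differ; the function name is hypothetical.

```python
import math
from fractions import Fraction


def source_frame_for(timeline_frame: int, timeline_fps: Fraction,
                     source_fps: Fraction) -> int:
    """Return the source frame on screen at a given timeline frame.

    Computes the timeline frame's start time exactly (Fraction keeps
    30000/1001 from drifting) and picks the source frame covering it.
    """
    t = Fraction(timeline_frame) / timeline_fps
    return math.floor(t * source_fps)


if __name__ == "__main__":
    ntsc = Fraction(30000, 1001)  # 29.97 fps timeline
    pal = Fraction(25)            # 25 fps source clip
    print([source_frame_for(n, ntsc, pal) for n in range(10)])
```

For a 25 fps clip on a 29.97 fps timeline, the first ten timeline frames show source frames 0, 0, 1, 2, 3, 4, 5, 5, 6, 7: a repeated frame roughly every six timeline frames keeps the clip in sync without transcoding it.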

You’ll also be glad to know that if YUV sources ever enter your workflow, Shotcut actually makes very intelligent guesses when metadata is missing. Defaulting to MPEG (limited) color range for YUV files is the industry standard even today. Most containers, like Apple’s MOV, don’t even have a flag to signal full range, because that’s just not a thing in a professional YUV workflow. It’s really just consumer devices like cell phones and DSLR/mirrorless cameras that create full-range YUV in MP4 containers, and MP4 has specialized metadata to signal it, which Shotcut knows how to read.
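The defaulting rule described above can be sketched as a small decision function. This is not Shotcut’s source code; it’s a hypothetical Python illustration that takes the `pix_fmt` and `color_range` values ffprobe reports for a video stream (`color_range` is “tv”, “pc”, or “unknown”). The list of RGB name prefixes is an assumption covering the common FFmpeg pixel-format names.

```python
from typing import Optional

# Assumed prefixes of FFmpeg RGB pixel-format names (gbrp, gbrap, rgb24, bgr0, ...).
RGB_PREFIXES = ("gbr", "rgb", "bgr", "argb", "abgr", "0rgb", "0bgr")


def effective_color_range(pix_fmt: str, ffprobe_range: Optional[str]) -> Optional[str]:
    """Guess the color range to apply to a clip.

    Returns None for RGB, where limited vs full does not apply; otherwise
    "full" or "limited".
    """
    if pix_fmt.startswith(RGB_PREFIXES):
        return None
    if pix_fmt.startswith("yuvj"):
        return "full"  # FFmpeg's legacy JPEG pixel formats imply full range
    if ffprobe_range == "pc":
        return "full"  # explicit full-range flag, e.g. from a phone's MP4
    # "tv", "unknown", or missing metadata: fall back to limited (MPEG) range.
    return "limited"
```

Only an explicit signal moves a YUV clip to full range; anything ambiguous lands on limited, matching the broadcast convention.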

Hopefully those details are able to set your mind at ease about the way Shotcut interprets your videos. If an RGB pipeline was good enough for you with After Effects, there are enough details here to create an RGB pipeline with Shotcut too and get identical results, albeit with a codec other than Lagarith. You should be able to hit the ground running at this point. If not, reply with the next hurdle and we’ll see what we can do for you.