The O.P. was interested in ProRes but I’ll add UtVideo to the list as well.

Shotcut 18.12.15 BETA

Lossless UtVideo using preset/default 4:2:2:

Original: R-G-B 16-180-16

Output: R-G-B 17-179-16

Within one digital value for R, G and B.

ProRes using default/preset:

Original:

R-G-B, 17-179-16

Output

R-G-B, 17-179-16

Note: this is slightly more accurate than the previous Shotcut version.

Alpha UtVideo using preset/default rgb24a

Original:

R-G-B, 17-179-16

Output

R-G-B, 17-179-16

Thanks, chris319.

Is that because the new Ut lossless preset is using the yuv422p format or could it still be more accurate?

After this, does this take care of the color issues you had with Shotcut?

@shotcut, did you fix ProRes’ colors for this beta after chris319’s report on it here?

I don’t know what difference the answers to these questions make. There will always be a small amount of rounding error due to the use of floating-point coefficients in BT.709. In my experience +/- 3 digital values in 8-bit video is as close as it gets.
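To illustrate where that rounding comes from, here is a minimal sketch of an 8-bit round trip through BT.709 Y'CbCr. It assumes full-range (0-255) RGB and limited-range Y'CbCr, which may not match exactly what Shotcut or the codecs do internally, and the function names are made up for illustration.

```python
# Round-trip an 8-bit RGB color through 8-bit BT.709 Y'CbCr.
# Assumptions: full-range RGB (0-255) in and out, limited-range Y'CbCr
# (Y' 16-235, Cb/Cr 16-240), no chroma subsampling (a flat color patch).

KR, KB = 0.2126, 0.0722      # BT.709 luma coefficients for R and B
KG = 1.0 - KR - KB           # coefficient for G


def rgb_to_ycbcr(r, g, b):
    """8-bit RGB -> 8-bit limited-range Y'CbCr, rounded to integers."""
    rf, gf, bf = r / 255, g / 255, b / 255
    y = KR * rf + KG * gf + KB * bf
    cb = (bf - y) / (2 * (1 - KB))
    cr = (rf - y) / (2 * (1 - KR))
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)


def ycbcr_to_rgb(y8, cb8, cr8):
    """8-bit limited-range Y'CbCr -> 8-bit RGB, rounded and clamped."""
    y = (y8 - 16) / 219
    cb = (cb8 - 128) / 224
    cr = (cr8 - 128) / 224
    rf = y + 2 * (1 - KR) * cr
    bf = y + 2 * (1 - KB) * cb
    gf = (y - KR * rf - KB * bf) / KG
    return tuple(max(0, min(255, round(v * 255))) for v in (rf, gf, bf))


def round_trip_error(rgb):
    """Per-channel absolute difference after RGB -> Y'CbCr -> RGB."""
    back = ycbcr_to_rgb(*rgb_to_ycbcr(*rgb))
    return tuple(abs(a - b) for a, b in zip(rgb, back))


if __name__ == "__main__":
    original = (16, 180, 16)
    print("Y'CbCr:", rgb_to_ycbcr(*original))
    print("per-channel error:", round_trip_error(original))
```

The error comes from the two integer rounding steps, one going into Y'CbCr and one coming back out, so a difference of a digital value or two does not by itself point to a wrong matrix.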

I have not found any other color errors in the latest beta version of Shotcut.

My first question was me asking because I am not tech savvy.  The output numbers you noted there for ProRes and the Ut rgb24a preset were identical so I was wondering if the difference in numbers with this beta’s Ut Lossless preset meant it was still off.

My second question was me wondering if this once and for all settled the color issues that you were noting Shotcut had, which is why this thread exists in the first place. You were also talking about a separate program you were working on in regards to the colors, I believe.

My third question is for Dan.

I found better swscale flags to use for RGB to YUV conversion. From the release notes:

- Improved color accuracy of internal RGB-to-YUV conversions.

shotcut:

Your attention to color accuracy is very much appreciated.

Sorry I didn’t reply sooner, chris319. The answer is contained in the write-up I’m doing on optimal proxy codecs within Shotcut, which I had hoped would be done long before now. But it’s going to be delayed a little more because I recently discovered DNxHR is available as a profile under the DNxHD codec, and I want to give DNxHR a chance to face-off with ProRes.

The highly subjective “3x sharpness gain” figure is based on a colorist workflow. If colorists are going to grade on proxies, they need to see clear distinction between shadow, midtone, and highlight to know what the grade will do to the full-resolution originals.

When I did a default scale of 4K or 1080p footage down to 640x360 or smaller, there were so many pixels averaged together in the downsize that all contrast within those averaged pixels just blurred together. A colorist cannot grade on mush.

By using the highest quality scaler along with dithering (where useful) and unsharp masking, the distinction between shadow, midtone, and highlight comes back to a usable level. Since we’ve gained in essence three colors (contrasts) where there was originally just one blurred color, I subjectively called that 3x sharpness gain. It is not scientific at all, which is unusual for me, but I’m not aware of any other way to quantify sharpness comparisons between images of different resolutions. If someone disputed my logic, I would probably agree with them.

Update: I was still using zscale when I wrote that 3x figure, before running into the Red Shift Problem. Now that I’m using libswscale instead, I would say the post-processing chain achieves 2.5x sharpness gain over defaults. zscale does hold better color at high-contrast edges when it works, but it doesn’t always work. In fact, throw a patch of bright red in the image and scale down to really small in 4:2:0, and it will always fail.

So “scale” is preferable to “zscale”?

You can check horizontal luma resolution by emulating a square wave as in this test pattern. Keep in mind, though, that 4:2:2 will drop every other chroma sample horizontally and 4:2:0 every other sample horizontally and vertically.
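As a rough illustration of how much chroma survives in each format, this sketch computes the chroma plane size for a given luma size. The function name is hypothetical, and rounding odd dimensions up is an assumption about how an encoder would pad.

```python
# Chroma plane dimensions for common subsampling schemes.
# The divisors are (horizontal, vertical) relative to the luma plane.
SUBSAMPLING = {
    "4:4:4": (1, 1),   # full chroma resolution
    "4:2:2": (2, 1),   # half horizontally
    "4:2:0": (2, 2),   # half horizontally and vertically
}


def chroma_plane_size(width, height, scheme):
    """Return (chroma_width, chroma_height) for a luma plane of width x height."""
    fx, fy = SUBSAMPLING[scheme]
    # Round up so an odd luma dimension still gets a chroma sample at the edge.
    return (width + fx - 1) // fx, (height + fy - 1) // fy


if __name__ == "__main__":
    for scheme in SUBSAMPLING:
        print(scheme, chroma_plane_size(1920, 1080, scheme))
```

So a square-wave pattern that tests luma at full horizontal resolution is already beyond what the chroma planes can carry in 4:2:2 or 4:2:0.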

The scale-vs-zscale choice depends heavily on the situation.

The zscale bug only happens in 4:2:0 or 4:2:2. If you’re able to up-sample or operate in 4:4:4 or RGB, it’s fine.

If the zscale bug was fixed, I would be back to it before nightfall.

For the case of creating small proxies in 4:2:0 like I’m doing, it kinda makes scale the winner by default because zscale disqualified itself. But for photographic purposes in RGB, zscale would be fine.

Here’s an animated GIF I put together to demonstrate the problem. Sorry the source video is not a great work of art, but this is the video where I happened to notice the problem in the first place during testing.  The source video was 4K and I scaled it down to the proxy sizes shown at the top of the GIF, then blew them back up to full-screen preview and took crops of the red fire hydrant. Notice how the left edge of the hydrant shifts. Naturally, the smaller the proxy, the bigger the shift appears when expanded up to full-screen preview. The shift of the red plane is so severe that it changes the overall brightness of the entire image. The 4:4:4 version is the only one that’s true to the source.

Whether that shift is enough to discourage someone from temporarily using zscale totally depends on their requirements.

The animated GIF above needs to be full screen to really see the effect.

This image can also illustrate the 3x sharpness thing I was talking about in the other thread. Comparing the grooves on the top of the hydrant between the 384x216 picture and the 960x540 picture, there is a clear separation of shadow, midtone, and highlight on the 960x540 version. Quality scaling plus unsharp masking brings back that detail at 270p and above, whereas a default scale looks more like the 384x216 version at pretty much all proxy resolutions. Not scientific, and probably a number I’ll never repeat again because of it, but now you can see what I was referring to.

Austin -

Can you please give a brief capsule summary of what you are doing?

I don’t understand about proxies.

Whatever you were doing was pretty brutal on that fire hydrant.

I’m beginning to wonder how much grading needs to be done if a camera is properly set up to begin with and the lighting is good. I come from several decades of live broadcast TV. Live means it has to look good from the outset. There is no opportunity to fix things in post because there is no post. Multiply that if you have to match 3 to 6 cameras to be intercut live.

Sure. Suppose you’ve got dirt-old hardware like I do and it cannot play back 4K source videos without stuttering. Or suppose you’ve got top-of-the-line hardware and it still stutters because you’ve decided to shove 8K video through the CPU. The basic premise is that the CPU cannot keep up with decoding, filtering, and compositing all the videos for a real-time preview.

Proxies get around this by creating duplicate videos at a smaller resolution. If the source is 4K and I generate proxies at 640x360 and I edit on the proxies, then the CPU has 36 times less data to process. With that much reduction in processing, the preview bounces back up to real-time and editing is smooth. When it’s time for the final export, I swap the original videos back in for processing and maximum quality is maintained.
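The 36x figure is just the ratio of pixel counts. A quick sketch of the arithmetic, assuming "4K" means UHD 3840x2160 (the function name is made up for illustration):

```python
# How much less pixel data the CPU handles per frame when editing on a proxy.
# Assumption: "4K" is UHD 3840x2160.

def pixel_reduction(source, proxy):
    """Ratio of source pixel count to proxy pixel count."""
    src_w, src_h = source
    prx_w, prx_h = proxy
    return (src_w * src_h) / (prx_w * prx_h)


if __name__ == "__main__":
    uhd = (3840, 2160)
    for proxy in [(960, 540), (640, 360), (384, 216)]:
        print(proxy, pixel_reduction(uhd, proxy))
```

The same calculation applies to the other proxy sizes mentioned in this thread, such as 960x540 and 384x216.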

The whole scale-vs-zscale thing comes into play when downsizing videos to make proxies.

I have attached a Lossless H.264 video with a single frame in it, which is a crop of the fire hydrant from the original 4K video. Below are the ffmpeg commands to generate a proxy using scale and zscale, then resize the proxy back up to 1080p full-screen preview using scale (because Shotcut resizes previews using scale, not zscale). The dimensions are weird numbers because we’re looking at a crop of a larger frame. But the bottom line is that the proxy is 10x smaller per axis, and zscale falls apart when downsizing by that much in 4:2:0 or 4:2:2. Compare the PNGs that are generated by the ffmpeg commands to see the shift happen.

As for the virtues of color grading in the first place, there are two different environments involved. Broadcast live television, as you are accustomed to, is more a matter of color correction because the goal is to look accurate. That’s where WhiBal gray cards and X-Rite Color Checkers and meticulous lighting come into play, and that works great for that environment. Color grading meanwhile is the art of intentionally distressing colors in post-production to create a more dramatic look. Extreme grading might even wholesale remove every color except three to create an extremely focused tri-tone image. I point to the infamous and overdone orange-and-teal look we see from so many Hollywood movies. Natural lighting cannot achieve the same look because the image is purposefully distressed into impossible color and lighting situations to grab attention by its peculiarity. For people working in narrative filmmaking or similar environments, having color-accurate proxies is an absolute requirement to speed up the workflow. This is the feature I want to bring to Shotcut.

And now for the ffmpeg commands. And yes, zscale still has Red Shift if lanczos is substituted for spline36.

MAKE ZSCALE PROXY

ffmpeg ^
-i "Hydrant 4K Crop.mp4" ^
-filter:v ^
zscale=matrix=709:primaries=709:transfer=709:range=limited:^
width=-2:height=90:filter=spline36:dither=ordered,^
unsharp=3:3:0.20:3:3:0.20,^
format=yuv420p,^
scale=width=-2:height=454:sws_flags=bilinear:sws_dither=none ^
-colorspace 1 -color_primaries 1 -color_trc 1 ^
-f image2 "Hydrant Proxy with zscale.png"

MAKE SCALE PROXY

ffmpeg ^
-i "Hydrant 4K Crop.mp4" ^
-filter:v ^
scale=out_color_matrix=bt709:out_range=limited:width=-2:height=90:^
sws_flags=lanczos+accurate_rnd+full_chroma_int+full_chroma_inp:sws_dither=ed,^
unsharp=3:3:0.20:3:3:0.20,^
format=yuv420p,^
scale=width=-2:height=454:sws_flags=bilinear:sws_dither=none ^
-colorspace 1 -color_primaries 1 -color_trc 1 ^
-f image2 "Hydrant Proxy with scale.png"

Hydrant 4K Crop.zip (338.6 KB)

Good gravy. Sounds like the tail wagging the dog. No wonder those videos look like mush.

Maybe you could save up for a new computer? Normally I would suggest Mac because Microsoft is really struggling with problems in Windows 10, but if finances are an issue then PC it is, preferably running Windows 7. Or you could take a flying leap on Linux.

What do you need 4k for anyway? Is this for theatrical release? YouTube is going to downsample it and it can’t be broadcast, so … ? And what are you going to view this on with 4k resolution? If it’s for theatrical release then you must be really strapped financially to be using Shotcut, no offense intended.

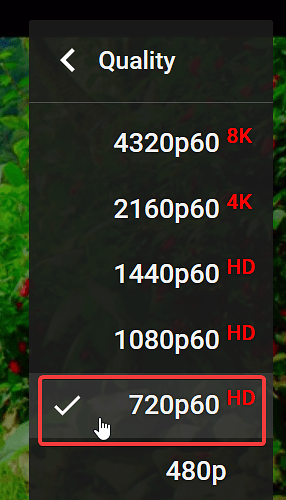

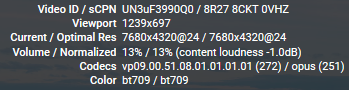

And 8k content as well.

I tried to prevent the “get better hardware” response before somebody made it, but I failed.

Hardware solves nothing. Humans are determined to want higher and higher quality regardless of how much hardware is given to them. We can’t buy our way past viewer expectations with hardware alone because viewers adapt too fast and then expect more before we can afford to provide it. Hence, proxies will be with us forever, which is why DaVinci Resolve and Premiere and FCP and even Kdenlive and Blender all support proxy workflows.

Another use for proxies is experimental videography like the recent trend of stitching three cameras together to get 12K footage. Here are two examples, neither of which could be edited in real-time and required hours of offline processing by the authors because no hardware-accelerated solution existed:

To my knowledge, there is only one commercially-available hardware configuration that can do 8K video editing in real-time, and it requires RTX video cards that have only existed for a few months. For any other configuration, proxies are required. Does this mean curious tech guys have to go home and wait for Christmas because hardware couldn’t support their vision? Nope. We work around it, in this case with proxies.

My point is that getting new hardware is a very expensive stop-gap solution because the next jump in resolution will obsolete it overnight. Proxies can extend the life of existing hardware by years with no loss in final quality. If someone does happen to be blessed with up-to-date hardware, proxies let them stack even more filters and layer more tracks than they could before. It’s always a win.

Now the million-dollar question is whether the higher resolution is worth it. The answer is situational of course. I could easily imagine the city planners of New York City wanting a 12K flyover in their archive for reference as the city expands and changes. For broadcast TV, 12K of course is absurd these days. For me, 4K is totally worth it for my delivery targets. A versatile video editor should be able to handle all of these situations.

In my case, the issue comes down to the camera hardware, interestingly enough. If a mirrorless camera that takes native 4K video is put into 1080p mode, it does one of two things: it either samples every other pixel horizontally and vertically to get a 1080p readout with no software scaling, or it attempts low-quality 4K-to-1080p software scaling to remain real-time and not generate too much heat or battery drain from processing. Both options are terrible. I encourage you to try this for yourself to see the difference… put a 4K video on a timeline and export it at 1080p with Lanczos scaling, and compare that to a native 1080p camera capture of the same thing. The difference is night and day.

If you want to get super technical about it, this can be compared to oversampling in the audio world, where 44.1 kHz was chosen for audio CDs because that’s the Nyquist rate for human hearing (20 kHz plus buffer for aliasing filters). In the same way, 4K is the Nyquist of 1080p, and the results speak for themselves. So for me, 4K is necessary because I want the best possible 1080p. For the theatrical guys, they’re all getting into 8K for the same reason… 8K is the Nyquist of 4K, and the streaming providers like Amazon and Netflix require that new material be delivered to their studios in 4K. That’s a contractual obligation. It is what it is regardless of perceived quality differences.
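A toy example of why pixel skipping is so much worse than filtered scaling: take a row holding the finest detail a sensor can record (alternating black and white pixels) and halve it both ways. This is only a sketch; the pair-averaging here is a crude stand-in for a real scaler like Lanczos, and it makes no claim about what any particular camera does internally.

```python
# Halving a row of pixels: skipping samples vs. filtering before decimation.

def skip_pixels(row):
    """Keep every other sample with no filtering (line/pixel skipping)."""
    return row[::2]


def average_pairs(row):
    """Average each pair of neighbours (a crude box filter), then decimate."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


if __name__ == "__main__":
    # Alternating black/white pixels: detail too fine for half the resolution.
    fine_detail = [0, 255] * 8
    print("skipped: ", skip_pixels(fine_detail))    # lands on one phase only
    print("averaged:", average_pairs(fine_detail))  # detail becomes mid-gray
```

Skipping reports whichever phase of the pattern it happens to land on, so detail the smaller frame cannot hold turns into false flat areas or moiré, while filtering first folds it into an honest average.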

YouTube is only one of many delivery outlets. I currently target Blu-ray 2K and 4K, and local broadcast TV using 1080i downsampled from 4K. I would like to attempt a 4K DCP in my local cinema just because I’m psycho enough to enjoy the challenge. As for the hardware to make that all happen, yes I am short on cash because the cash went to camera gear instead of computer hardware, and no, I’m not offended by you wondering why I’m doing the crazy work-arounds I’m doing.  If it helps you pity me less, I’m using proxies at 960x540 which if you refer to the 4-panel Red Shift animation I sent earlier, has a very usable level of detail compared to the 384x216 mush. The 384x216 setting is for old laptops that are barely a step better than an abacus, and I sometimes use 384x216 if I need to stack 30+ tracks for an experiment.

I already use Windows 7 and Linux Mint. I wouldn’t touch Mac with a 10-foot pole these days. A lot of studios have been migrating from Mac to PC since 2016 because the price-to-performance ratio was so much better with PC, and the arrival of DNxHR has sufficiently threatened the ProRes monopoly. There’s not much reason left to choose a Mac for studio work.

I’ll take your word for it if you can describe it in a single paragraph.

If the 4k → 1080 conversion is better than native 1080 then how is this accomplished? By skipping alternate pixels or with a “low-quality 4K-to-1080p software scaling” or some other magic? Or is the 1080 native better? Again, I’ll take your word for it.

The guys I know who do high-end stuff here in Hollywood aren’t skimping on hardware by continuing to use ancient PC hardware, that I know.

ISTR reading about an NHK study to determine the highest resolution that could be discerned by the human eye. I think it was 8k but I could be wrong. The question is how to distribute this to the masses.

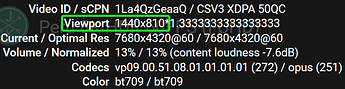

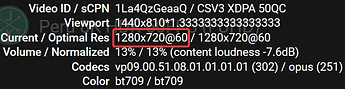

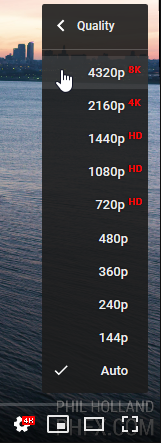

I downloaded "Peru 8K" from YouTube

and MediaInfo reports the following:

Bit rate : 1 762 kb/s

Width : 1 280 pixels

Height : 720 pixels

Dash it all, I can’t watch it on YouTube natively. My 8K monitor and my 8K browser with HTML5 support are in the garage having the wheels aligned.