I get that some people figure 720 is SHD. But I just don’t want to put that out. OCD, over-thinking, whatever - it’s …um… how I roll.

I bounce back and forth between Resolve and Shotcut. Emotionally, I prefer Shotcut, but I keep banging into what are, IMNSHO, significant problems. Being able to move a group of clips simultaneously is what started this discussion. In Shotcut, the only way to move a large collection of settled clips (no changes remaining) is to render an intermediate version before continuing to work. Except, as this thread is all (mostly) about, there is apparently no smooth workflow for doing that. If I knew I could edit, render, edit, render, edit, I could get on with my real job: producing a video.

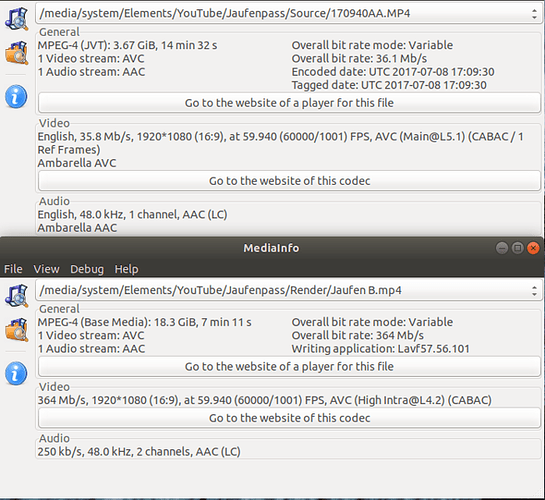

Right now I’ve got a timeline with about 15 edits in it (and they’re workarounds for other missing features, including keyframes). Editing a point in the middle of the timeline means moving either the 7-8 clips in front of it or the 5 clips behind it, to say nothing of re-aligning the freeze-frame clips on V2. If there’s another way to do the work, I’m listening.

BTW, DNxHD 1080p 59.94 works better for me than ProRes. DNxHD has a very slight stutter in playback, whereas ProRes goes back to the behavior reported in my initial post.

[code]General
Complete name : I:\YouTube\Jaufenpass\Render\Jaufen 01 DNxHD 1080p 5994.mov
Format : MPEG-4
Format profile : QuickTime
Codec ID : qt 0000.02 (qt )
File size : 5.46 GiB
Duration : 1 min 46 s
Overall bit rate mode : Constant
Overall bit rate : 442 Mb/s
Writing application : Lavf57.56.101

Video
ID : 1
Format : VC-3
Format version : Version 1
Format profile : HD@HQ
Codec ID : AVdn
Codec ID/Info : Avid DNxHD
Duration : 1 min 46 s
Bit rate mode : Constant
Bit rate : 440 Mb/s
Width : 1 920 pixels
Height : 1 080 pixels
Display aspect ratio : 16:9
Frame rate mode : Constant
Frame rate : 59.940 (60000/1001) FPS
Color space : YUV
Chroma subsampling : 4:2:2
Bit depth : 8 bits
Scan type : Progressive
Bits/(Pixel*Frame) : 3.540
Stream size : 5.44 GiB (100%)
Language : English

Audio
ID : 2
Format : PCM
Format settings : Little / Signed
Codec ID : sowt
Duration : 1 min 46 s
Bit rate mode : Constant
Bit rate : 1 536 kb/s
Channel(s) : 2 channels
Channel positions : Front: L R
Sampling rate : 48.0 kHz
Bit depth : 16 bits
Stream size : 19.4 MiB (0%)
Language : English
Default : Yes
Alternate group : 1
[/code]

[code]General
Complete name : I:\YouTube\Jaufenpass\Render\Jaufen 01 ProRes.mov
Format : MPEG-4
Format profile : QuickTime
Codec ID : qt 0000.02 (qt )
File size : 6.06 GiB
Duration : 1 min 46 s
Overall bit rate mode : Variable
Overall bit rate : 490 Mb/s
Writing application : Lavf57.56.101

Video
ID : 1
Format : ProRes
Format version : Version 0
Format profile : 422
Codec ID : apcn
Duration : 1 min 46 s
Bit rate mode : Variable
Bit rate : 489 Mb/s
Width : 1 920 pixels
Height : 1 080 pixels
Display aspect ratio : 16:9
Frame rate mode : Constant
Frame rate : 59.940 (60000/1001) FPS
Color space : YUV
Chroma subsampling : 4:2:2
Scan type : Progressive
Bits/(Pixel*Frame) : 3.931
Stream size : 6.04 GiB (100%)
Writing library : fmpg
Language : English
Matrix coefficients : BT.601

Audio
ID : 2
Format : PCM
Format settings : Little / Signed
Codec ID : sowt
Duration : 1 min 46 s
Bit rate mode : Constant
Bit rate : 1 536 kb/s
Channel(s) : 2 channels
Channel positions : Front: L R
Sampling rate : 48.0 kHz
Bit depth : 16 bits
Stream size : 19.4 MiB (0%)
Language : English
Default : Yes
Alternate group : 1
[/code]

The roughly 50 Mb/s difference in bit rate (490 Mb/s for ProRes vs. 442 Mb/s for DNxHD) makes a big difference in playback.
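For anyone who wants to double-check the numbers, here's a quick Python sketch that redoes the arithmetic from the two MediaInfo reports. It assumes the rounded values shown there (440 and 489 Mb/s video, 442 and 490 Mb/s overall) and a duration of exactly 106 s; MediaInfo truncates "1 min 46 s", so expect small mismatches in the last digit. The helper names are just made up for illustration.

```python
# Re-derive a few MediaInfo figures from the two reports above.
# Assumption: rounded bit rates as printed, and a 106 s duration ("1 min 46 s").
WIDTH, HEIGHT = 1920, 1080
FPS = 60000 / 1001  # 59.94 fps, as reported for both files


def bits_per_pixel_frame(bit_rate_bps: float) -> float:
    """MediaInfo's Bits/(Pixel*Frame): video bit rate / (pixels per frame * fps)."""
    return bit_rate_bps / (WIDTH * HEIGHT * FPS)


def size_gib(bit_rate_bps: float, seconds: float) -> float:
    """Approximate file size in GiB from an overall bit rate and a duration."""
    return bit_rate_bps * seconds / 8 / 2**30


dnxhd_video, prores_video = 440e6, 489e6      # video stream bit rates, b/s
dnxhd_overall, prores_overall = 442e6, 490e6  # overall bit rates, b/s

# The gap is in megabits per second (a data rate), not megahertz.
diff_mbps = (prores_video - dnxhd_video) / 1e6

print(f"Video bit-rate difference: {diff_mbps:.0f} Mb/s")
print(f"DNxHD  Bits/(Pixel*Frame): {bits_per_pixel_frame(dnxhd_video):.3f}")
print(f"ProRes Bits/(Pixel*Frame): {bits_per_pixel_frame(prores_video):.3f}")
print(f"DNxHD  est. size: {size_gib(dnxhd_overall, 106):.2f} GiB")
print(f"ProRes est. size: {size_gib(prores_overall, 106):.2f} GiB")
```

The DNxHD figure comes out at MediaInfo's 3.540. The ProRes figure lands a few thousandths above 3.931 because MediaInfo works from the unrounded bit rate. The estimated sizes fall within a few hundredths of a GiB of the reported 5.46 and 6.06 GiB.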